Introduction

Since its explosive emergence in mid-2022, generative AI has quickly captured global attention. What initially centered on the language modality has since expanded into exciting new avenues, including image, audio, and video models. Early in 2023, speculation about the technology’s potential impact on businesses across various industries grew, accompanied by exciting early adoption cases. As more developers began building solutions with these models, the general perception shifted toward the continuous emergence of newer, larger, and hopefully better versions of the most widely used models.

As we enter 2024, a key insight has emerged: deploying AI isn’t simply about adopting the latest, largest model off the shelf. While it’s common to assume that AI solutions are ready-made or that increasing model size automatically leads to better results, this approach rarely meets the specialized needs of most businesses. In reality, successful applications require AI solutions that are tailored, flexible, and efficient.

To achieve this, we turn to Compound AI Systems. Unlike single, monolithic models, compound AI systems integrate multiple specialized AI components, each optimized for a specific role. This structure ensures high customizability, adaptability, and precision, transforming AI from a general tool into a purpose-built solution. By combining smaller, interconnected AI components, businesses can achieve performance and results far beyond the scope of off-the-shelf models alone. Therefore, for optimal business impact across industries, we argue that a strategic vision should prioritize smarter system designs over simply building larger, more computationally demanding models.

Understanding Compound AI Systems

The Berkeley Artificial Intelligence Research lab (BAIR) defines a compound AI system as a system “that tackles AI tasks using multiple interacting components, including multiple calls to models, retrievers, or external tools”. For example, a Retrieval-Augmented Generation (RAG) system is a compound system that combines a Large Language Model (LLM), an information retrieval mechanism, and a vector database. In contrast, a generative AI model on its own is a statistical model; for instance, an LLM predicts the next token in a text based on patterns learned from its training data.

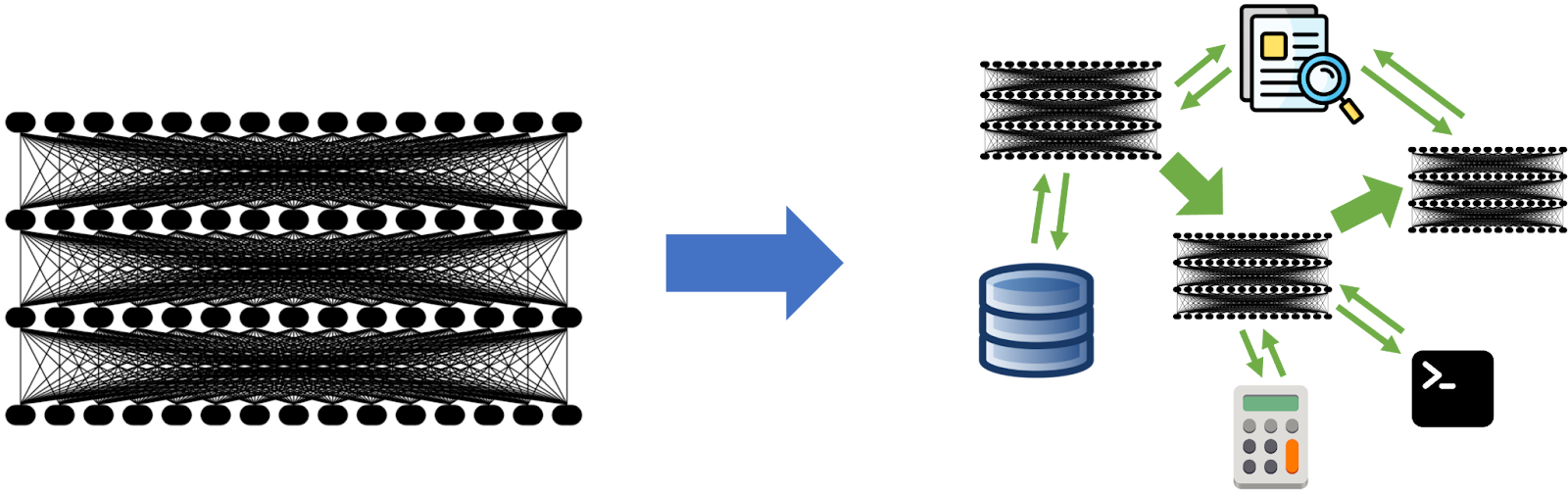

In this context, a model can be seen as a single block, while a compound AI system is more like a machine composed of multiple building blocks, each serving a specific function to achieve the system’s overall goal.

Models vs. AI Compound Systems. Source

How Are Such Systems Helpful?

Specialization: A Big Hammer is Not the Right Tool for Everything

When addressing specific applications or industry needs, relying on a general-purpose AI model like GPT-4 may not be sufficient. While powerful, such models are designed to handle a broad range of tasks and may lack the specialized knowledge required for particular applications, leading to diminishing returns beyond a certain point.

For instance, a financial institution seeking to develop a chatbot for investment analysis or wealth management needs a system that incorporates both specialized knowledge and enterprise-specific expertise. Given the nature of the industry, there would be concerns around privacy (the firm may require on-premise solutions and exclusive use of open models), accuracy (the solutions must be impeccably accurate), and efficiency. Using even the most powerful language models as a stand-alone solution would definitely not be the optimal choice. Instead, a compound AI system could be highly effective by integrating multiple specialized components, such as Retrieval-Augmented Generation (RAG) systems and tailored AI agents. This approach ensures that each part of the system is optimized for its specific role.

Flexibility: Modular Systems Adapt to Changing Needs with Ease

When a system is built using modular components, replacing or upgrading individual parts becomes much simpler. The same principle applies to compound AI systems, which are built from multiple blocks. If a component within a compound AI solution becomes outdated or fails to meet new compliance requirements, it can be replaced without the need for a complete overhaul of the entire system. For example, if a new, more suitable model becomes available, it can be integrated into the system to replace the older version. Similarly, if a more efficient information retrieval mechanism is developed, it can be swapped in without disrupting the entire setup. This flexibility extends beyond models and retrieval systems to other components, such as data processing units, analytics engines, or compliance modules.
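The swap-in, swap-out idea described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the `Retriever` and `Generator` interfaces, the `CompoundSystem` class, and the toy components are all hypothetical names invented for this example, and the "generators" are simple string templates standing in for real models.

```python
from dataclasses import dataclass
from typing import Protocol


class Retriever(Protocol):
    """Hypothetical interface: anything that can fetch context for a query."""
    def retrieve(self, query: str) -> list[str]: ...


class Generator(Protocol):
    """Hypothetical interface: anything that can write an answer from context."""
    def generate(self, query: str, context: list[str]) -> str: ...


class KeywordRetriever:
    """Toy retriever: returns documents that share at least one word with the query."""
    def __init__(self, documents: list[str]) -> None:
        self.documents = documents

    def retrieve(self, query: str) -> list[str]:
        words = set(query.lower().split())
        return [d for d in self.documents if words & set(d.lower().split())]


class TemplateGenerator:
    """Stand-in for a language model: formats the query and context into a string."""
    def generate(self, query: str, context: list[str]) -> str:
        return f"Q: {query} | context: {'; '.join(context) or 'none'}"


class UppercaseGenerator:
    """Stand-in for a 'newer' model with the same interface but different output."""
    def generate(self, query: str, context: list[str]) -> str:
        return TemplateGenerator().generate(query, context).upper()


@dataclass
class CompoundSystem:
    retriever: Retriever
    generator: Generator

    def answer(self, query: str) -> str:
        # The system only depends on the interfaces, not on concrete components.
        return self.generator.generate(query, self.retriever.retrieve(query))


system = CompoundSystem(
    retriever=KeywordRetriever(["rates rose in 2023", "weather is mild"]),
    generator=TemplateGenerator(),
)
before = system.answer("rates outlook")

# Replace one component; the retriever and the rest of the system are untouched.
system.generator = UppercaseGenerator()
after = system.answer("rates outlook")
```

Because `CompoundSystem` depends only on the two interfaces, replacing the generator is a one-line change, which is the property the paragraph above relies on.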

Scalability: Swarms of Intelligent Components Outshine a Single Giant

The modular nature of compound AI systems offers significant advantages in scalability. By allowing individual components to be scaled independently, these systems can efficiently manage increasing data volumes and complexity without requiring a complete overhaul.

A system can also be scaled by replicating it into a network of systems, which in principle allows it to keep growing horizontally as demand increases. A single language model, by contrast, no matter how large or powerful it is (as of today), cannot effectively search a very large database for a specific piece of information. To scale up a model’s search capabilities, you will inevitably need to create a multi-component system to enhance the search function. If even the simplest tasks, like information retrieval, cannot be effectively scaled by a single model, it becomes clear that individual components, on their own, cannot support large-scale, complex applications.

Why Compound AI Systems Make Business Sense

From a business perspective, adopting compound AI systems goes beyond technical sophistication – it provides strategic advantages that directly align with business goals. One could even argue that if a business wishes to leverage generative AI, it has no choice but to build (or purchase) a compound system. While this may seem straightforward, it challenges the common business assumption that standalone, off-the-shelf models are sufficient to meet specialized demands.

Enhanced Customer Satisfaction

The most advanced AI models, on their own, cannot create a personalized experience. This can only be achieved through a compound system that enables the delivery of highly tailored and contextually relevant customer experiences. For example, Microsoft’s Custom Neural Voice combines general LLMs with custom voice training, allowing brands to create digital assistants that precisely align with their unique tone and style. This level of customization is particularly powerful in customer-facing industries, such as advertising, where customers respond positively to feeling special and understood. From a business perspective, combining this technology with the ability to add context results in personalized outcomes, ultimately driving customer satisfaction.

Cost Efficiency

Unlike individual models that provide a fixed level of quality at a fixed cost, compound AI offers flexible cost-quality configurations. For example, businesses can integrate a smaller, instruction-tuned model with specialized components, such as search heuristics, to achieve high-quality results at a lower cost compared to larger, standalone models. This flexibility enables the use of smaller, potentially open-source models that, with targeted engineering, can deliver results comparable to more expensive solutions.
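One possible way to get a tunable cost-quality trade-off is a model cascade: try a cheap model first and escalate to an expensive one only when the cheap answer looks unreliable. The sketch below is an assumption-laden illustration rather than the approach the text prescribes. The two "models" are hypothetical stand-in functions, the confidence scores are made up, and the cost figures are arbitrary units.

```python
from typing import Callable

# A "model" here is any function returning (answer, confidence in [0, 1]).
Model = Callable[[str], tuple[str, float]]


def small_model(query: str) -> tuple[str, float]:
    """Hypothetical cheap model: pretend it is only confident on short queries."""
    confidence = 0.9 if len(query.split()) <= 5 else 0.3
    return f"small:{query}", confidence


def large_model(query: str) -> tuple[str, float]:
    """Hypothetical expensive model: always confident."""
    return f"large:{query}", 0.95


def cascade(
    query: str,
    cheap: Model,
    expensive: Model,
    threshold: float = 0.7,       # assumed tuning knob: raise for quality, lower for cost
    cheap_cost: float = 1.0,      # arbitrary cost units
    expensive_cost: float = 20.0,
) -> tuple[str, float]:
    """Return (answer, total cost), escalating only when confidence is below threshold."""
    answer, confidence = cheap(query)
    cost = cheap_cost
    if confidence < threshold:
        answer, _ = expensive(query)
        cost += expensive_cost
    return answer, cost


easy = cascade("what is RAG", small_model, large_model)
hard = cascade("compare the tax treatment of these seven bond funds", small_model, large_model)
```

Moving `threshold` up or down shifts the system along the cost-quality curve without changing either model, which a single standalone model cannot offer.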

Better Control and Trust

For businesses, reliability and trustworthiness in AI outputs are crucial. Relying solely on individual models can make it challenging to achieve consistently factual, well-formatted results. For example, a previous client in the educational sector once requested a solution for automatically filling out applications based on their school data and information. Initially, I spent months crafting a sequential system based on advanced prompt engineering, without using a compound approach. Although results improved, they were never close enough to what we could present as fully completed applications. It was only when the concept of RAG was introduced that fully controlled results started to emerge. Yet, even RAG alone wasn’t enough; additional components were required to categorize information, maintain context coherence, and handle other nuances. Only then did we achieve the reliability and precision the client needed.

Conclusion

Examining the current landscape of AI in industrial applications reveals a clear trend: relying on a single model to perform complex functions often proves unreliable. As use cases become more intricate and enterprise adoption grows, the demand for highly specialized and capable AI solutions is set to increase. To meet this demand, one must orchestrate a solution architecture that incorporates enhanced and specialized models, avoiding the pitfall of having a narrow, unilateral scope.

The developer community is abuzz with exciting applications spanning fields from medicine to retail, all built by assembling smaller, specialized components into powerful, tailored solutions.

Even AI, on its own, is not smart enough to achieve strategic business objectives. It must be supplemented by a higher form of orchestrated intelligence.

Appendix

Examples of Compound AI Systems

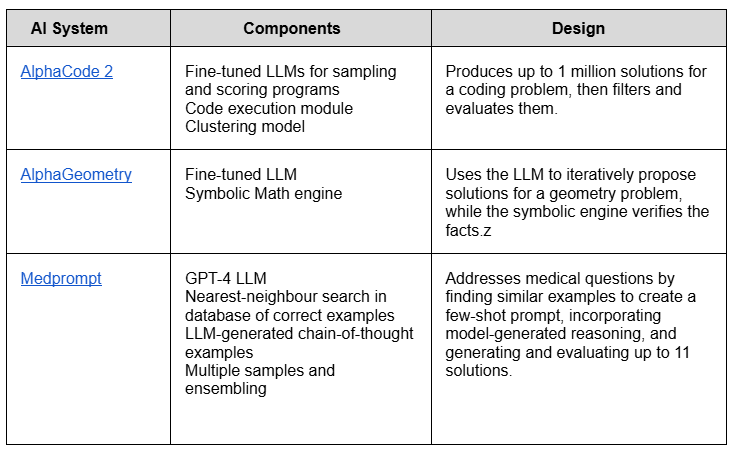

The following is a collection of impactful and interesting compound AI systems that highlight the usefulness of this concept. Regardless of the infrastructure developers are using, the goal is to observe how combining several AI components with other tools can achieve a very specific purpose.

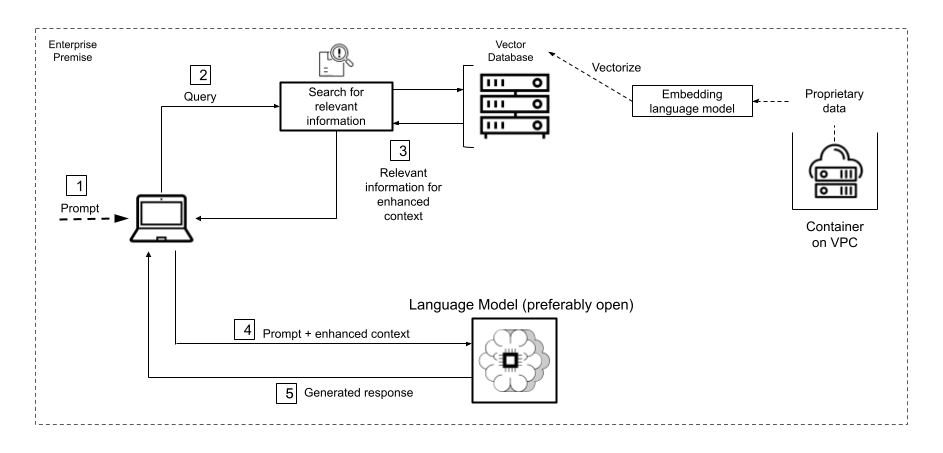

RAG enhances the output of an LLM by providing specific context obtained from a vector database that lies outside the model’s original training data. While LLMs are trained on vast datasets and leverage billions of parameters to generate responses, RAG takes this a step further. It enables the LLM to access and reference specific, up-to-date information, whether it’s domain-specific or drawn from an organization’s internal knowledge base. This process significantly improves the relevance, accuracy, and usefulness of the generated content, all without the need to retrain the model.

Enterprises with large datasets that require an efficient method for organizing internal knowledge can deploy this solution on-premise, using the model of their choice, to retrieve precise pieces of information. For instance, financial analysts can quickly locate relevant data within historical reports without the need to manually sift through each one. The model, enhanced by this contextual information, also generates more accurate and useful responses, streamlining the entire information retrieval process.
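The retrieve-then-generate flow described above can be sketched with the standard library alone. This is a toy under stated assumptions: `embed` uses bag-of-words counts instead of a learned embedding model, the "vector database" is an in-memory list, and the final LLM call is left out, so the sketch stops at the prompt that would be sent to the model. All function names and sample documents are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (real systems use learned embeddings)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, store: list[tuple[str, Counter]], k: int = 2) -> list[str]:
    """Return the k stored passages most similar to the query."""
    q = embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Assemble the augmented prompt that would be passed to the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Index step: embed each document once and keep it in an in-memory "vector store".
documents = [
    "Q3 revenue grew 12 percent",
    "The office moved to Berlin",
    "Q3 costs fell 4 percent",
]
store = [(doc, embed(doc)) for doc in documents]

# Query step: retrieve relevant passages, then build the prompt for the model.
top = retrieve("Q3 revenue", store, k=1)
prompt = build_prompt("Q3 revenue", top)
```

The model itself is never retrained here; only the prompt changes with the retrieved context, which is the point the section makes about RAG.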

The following is a typical RAG architecture:

General RAG Architecture

The following is a table of some common compound AI systems (source):
