Auriau, Vincent, Ali Aouad, Antoine Désir, and Emmanuel Malherbe. “Choice-Learn: Large-scale choice modeling for operational contexts through the lens of machine learning.” Journal of Open Source Software 9, no. 101 (2024): 6899.

Introduction

Discrete choice models aim to predict the choices individuals make from a menu of alternatives, called an assortment. Well-known use cases include predicting a commuter’s choice of transportation mode or a customer’s purchases. Choice models can handle assortment variations, such as alternatives becoming unavailable or their features changing across contexts. This adaptability allows these models to be used as inputs for optimization problems, including assortment planning and pricing.

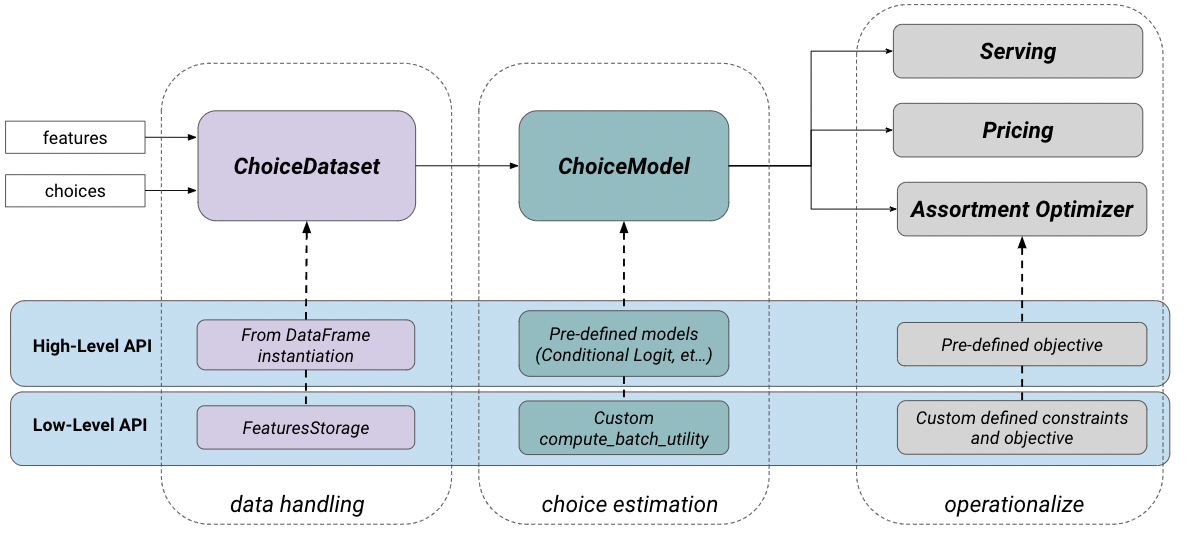

Choice-Learn provides a modular suite of choice modeling tools for practitioners and academic researchers to process choice data, and then formulate, estimate and operationalize choice models. The library is structured into two levels of usage, as illustrated in Figure 1. The higher level is designed for fast and easy implementation, and the lower level enables more advanced parameterizations. This structure, inspired by Keras’ different endpoints (Chollet et al., 2015), enables a user-friendly interface. Choice-Learn is designed with the following objectives:

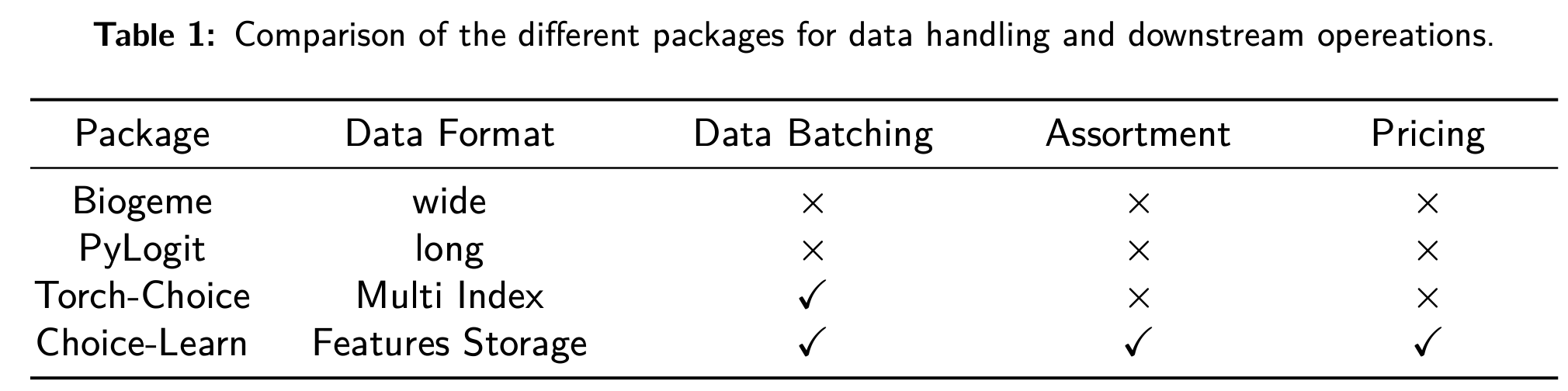

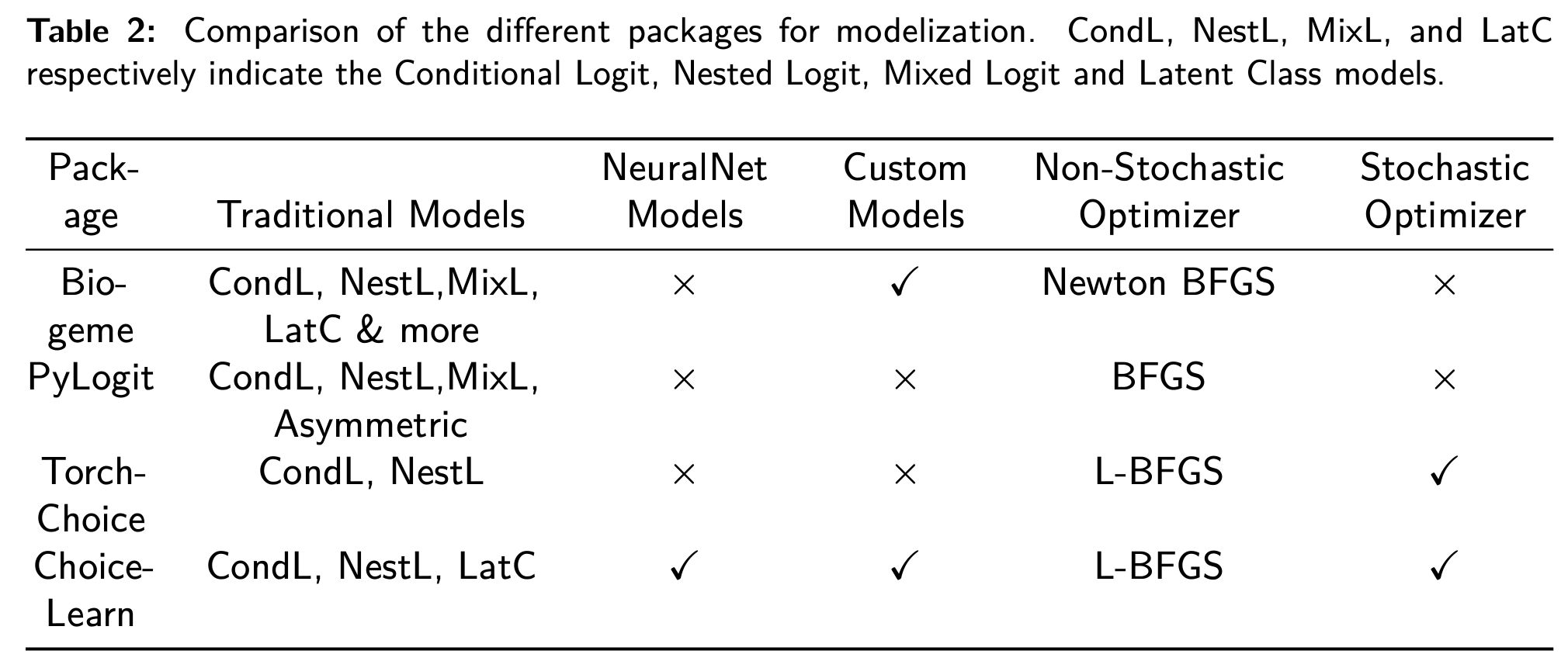

The main contributions are summarized in Tables 1 and 2.

Statement of need

Data and model scalability

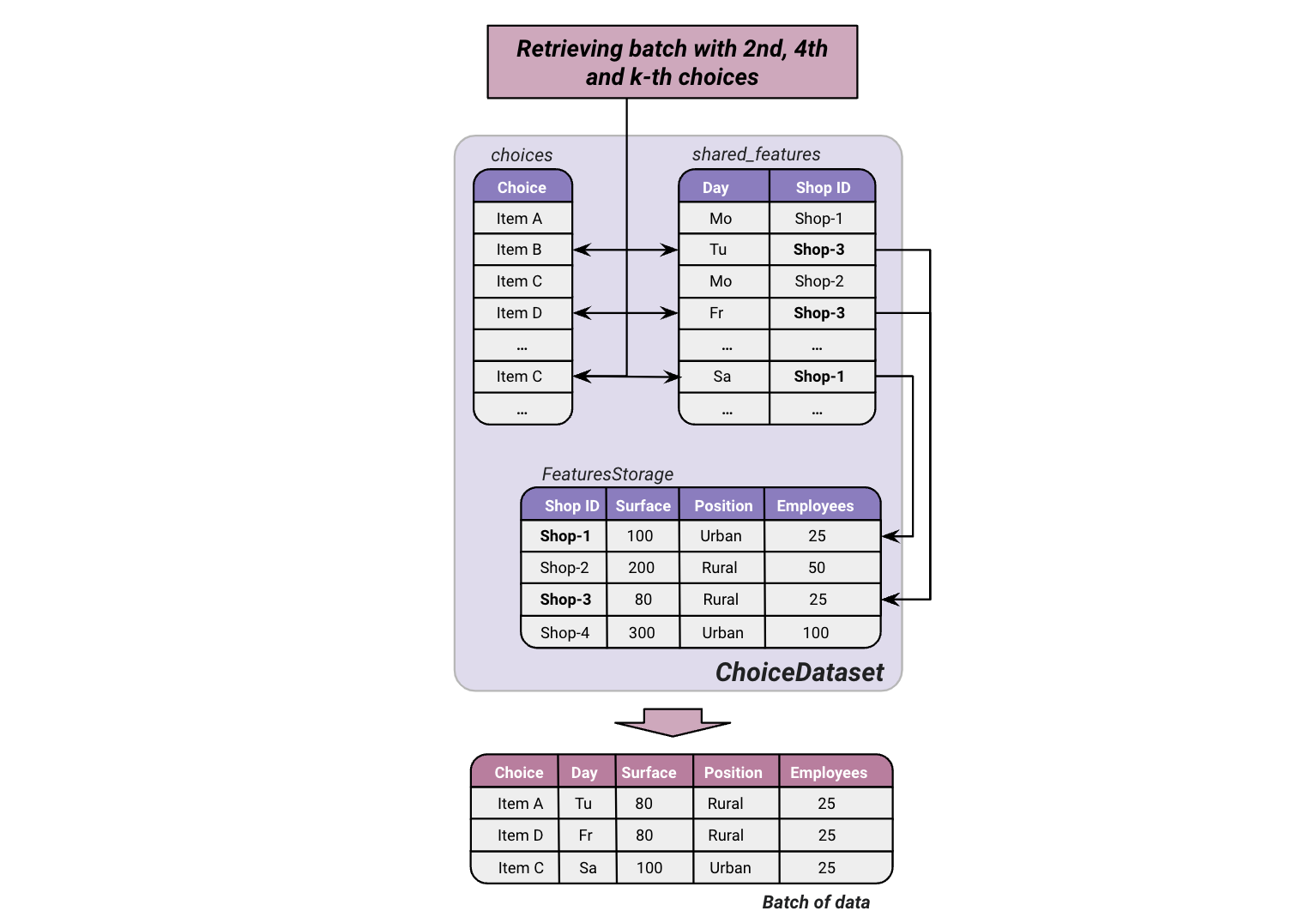

Choice-Learn’s data management relies on NumPy (Harris et al., 2020) to limit the memory footprint. It minimizes the repetition of item or customer features and defers joining the full data structure until batches of data are processed. The package introduces the FeaturesStorage object, illustrated in Figure 2, which allows feature values to be referenced only by their ID. These values are substituted for the ID placeholder on the fly during batching. For instance, supermarket features such as surface area or position are often stationary. They can therefore be stored in an auxiliary data structure, while in the main dataset the store where the choice is recorded is referenced only by its ID.
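The idea of referencing features by ID and substituting values only at batch time can be illustrated with a minimal NumPy sketch. It is not the FeaturesStorage implementation: the store names, feature values, and the `iter_batches` helper are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical stand-in for the idea behind FeaturesStorage (not the library's class):
# each store's features are kept exactly once, keyed by store ID.
store_features = {
    "store_a": np.array([1200.0, 0.0]),  # e.g. surface area, city-center flag
    "store_b": np.array([450.0, 1.0]),
    "store_c": np.array([800.0, 1.0]),
}
store_ids = list(store_features)
feature_table = np.stack([store_features[s] for s in store_ids])  # shape (3, 2)
id_to_row = {s: row for row, s in enumerate(store_ids)}

# The main dataset only records the ID of the store where each choice was made.
choice_store_ids = ["store_b", "store_a", "store_b", "store_c", "store_a", "store_b"]
choice_rows = np.array([id_to_row[s] for s in choice_store_ids])


def iter_batches(batch_size):
    """Yield feature batches, replacing IDs by feature values only at batch time."""
    for start in range(0, len(choice_rows), batch_size):
        rows = choice_rows[start:start + batch_size]
        # Integer-array indexing materializes the features for this batch only.
        yield feature_table[rows]


batches = list(iter_batches(batch_size=4))
```

Only `feature_table` and one integer per choice are held in memory; the full (choices × features) array exists one batch at a time.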

The package builds on TensorFlow (Abadi et al., 2015) for model estimation, offering fast quasi-Newton optimization algorithms such as L-BFGS (Nocedal & Wright, 2006) as well as various gradient-descent optimizers (Kingma & Ba, 2017; Tieleman & Hinton, 2012) specialized in handling batches of data. GPU usage is also possible, which can reduce estimation time. Finally, the TensorFlow backbone supports efficient use in production environments, for instance within assortment recommendation software, through deployment and serving tools such as TFLite and TFServing.

Flexible usage: From linear utility to customized specification

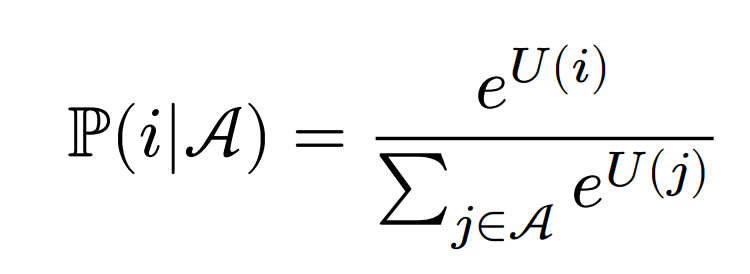

Choice models following the Random Utility Maximization principle (McFadden & Train, 2000) define the utility of an alternative 𝑖 ∈ 𝒜 as the sum of a deterministic part 𝑈 (𝑖) and a random error 𝜖𝑖. If the terms (𝜖𝑖)𝑖∈𝒜 are assumed to be independent and Gumbel-distributed, the probability of choosing alternative 𝑖 can be written as the softmax normalization over the available alternatives 𝑗 ∈ 𝒜:

$$\mathbb{P}(i \mid \mathcal{A}) = \frac{e^{U(i)}}{\sum_{j \in \mathcal{A}} e^{U(j)}}$$
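As a small numerical illustration of the softmax normalization over available alternatives, the sketch below computes choice probabilities in plain Python. The function name `choice_probabilities` and the utility values are assumptions made for this example; they are not part of Choice-Learn.

```python
import math


def choice_probabilities(utilities, available):
    """Softmax over the available alternatives; unavailable ones get probability 0."""
    # Subtract the largest available utility for numerical stability.
    max_u = max(u for u, a in zip(utilities, available) if a)
    weights = [math.exp(u - max_u) if a else 0.0 for u, a in zip(utilities, available)]
    total = sum(weights)
    return [w / total for w in weights]


utilities = [1.0, 0.5, -0.2]  # illustrative deterministic utilities U(i)
full = choice_probabilities(utilities, [True, True, True])
reduced = choice_probabilities(utilities, [True, False, True])  # alternative 1 removed
```

When an alternative is removed, its probability mass is redistributed over the remaining alternatives, and the ratio between any two remaining probabilities is unchanged.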

The choice-modeler’s job is to formulate an adequate utility function 𝑈 (.) depending on the context. In Choice-Learn, the user can parametrize predefined models or freely specify a custom utility function. To declare a custom model, one needs to inherit from the ChoiceModel class and override the compute_batch_utility method as shown in the documentation.
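The subclass-and-override pattern can be sketched without the library itself. In the example below, `SketchChoiceModel` is a hypothetical stand-in base class written in NumPy, not Choice-Learn's ChoiceModel, and the two-argument signature of `compute_batch_utility` is a simplification assumed for illustration; the library's documentation gives the actual signature.

```python
import numpy as np


class SketchChoiceModel:
    """Hypothetical stand-in base class; NOT Choice-Learn's ChoiceModel."""

    def compute_batch_utility(self, items_features, available_items):
        raise NotImplementedError

    def predict_probas(self, items_features, available_items):
        utilities = self.compute_batch_utility(items_features, available_items)
        # Masked, numerically stable softmax (assumes at least one available item per row).
        masked = np.where(available_items, utilities, -np.inf)
        shifted = masked - masked.max(axis=1, keepdims=True)
        exp_u = np.exp(shifted)
        return exp_u / exp_u.sum(axis=1, keepdims=True)


class LinearUtilityModel(SketchChoiceModel):
    """Custom model: only the utility computation is overridden."""

    def __init__(self, coefficients):
        self.coefficients = np.asarray(coefficients, dtype=float)

    def compute_batch_utility(self, items_features, available_items):
        # items_features: (batch, n_items, n_features) -> utilities: (batch, n_items)
        return items_features @ self.coefficients


# One choice situation, three items, two features per item (illustrative values).
items_features = np.array([[[1.0, 2.0], [0.5, 1.0], [2.0, 0.0]]])
available = np.array([[True, True, False]])
model = LinearUtilityModel([-1.0, 0.5])
probas = model.predict_probas(items_features, available)
```

Keeping the softmax in the base class and the utility in the subclass means a new specification only has to define how utilities are computed from a batch.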

Library of traditional random utility models and machine learning-based models

Traditional parametric choice models, including the Conditional Logit (Train et al., 1987), often specify the utility function in a linear form. This provides interpretable coefficients but limits the predictive power of the model. Recent works propose the estimation of more complex models, with neural network approaches (Aouad & Désir, 2022; Han et al., 2022) and tree-based models (Aouad et al., 2023; Salvadé & Hillel, 2024). While existing choice libraries (Bierlaire, 2023; Brathwaite & Walker, 2018; Du et al., 2023) are often not designed to integrate such machine learning-based approaches, Choice-Learn provides a collection including both types of models.

Downstream operations: Assortment and pricing optimization

Choice-Learn offers additional tools for downstream operations that are not usually integrated in choice modeling libraries. In particular, assortment optimization is a common use case that leverages a choice model to determine the optimal subset of alternatives to offer customers in order to maximize a given objective, such as the expected revenue, conversion rate, or social welfare. This framework captures a variety of applications such as assortment planning, display location optimization, and pricing. We provide implementations based on the mixed-integer programming formulation described in Méndez-Díaz et al. (2014), with the option to choose the solver between Gurobi (Gurobi Optimization, LLC, 2023) and OR-Tools (Perron & Furnon, 2024).
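To make the assortment optimization problem concrete, the sketch below maximizes expected revenue under a multinomial logit model by brute-force enumeration of subsets. This is not the mixed-integer programming formulation used in the package and is only practical for a handful of alternatives. The outside (no-purchase) option with utility 0, the function names, and the numerical values are assumptions made for this example.

```python
import math
from itertools import combinations


def expected_revenue(assortment, utilities, prices):
    """Expected revenue of an assortment under MNL with an outside option of utility 0."""
    weights = {i: math.exp(utilities[i]) for i in assortment}
    denominator = 1.0 + sum(weights.values())  # the 1.0 is exp(0) for the outside option
    return sum(prices[i] * w for i, w in weights.items()) / denominator


def best_assortment(utilities, prices, max_size=None):
    """Enumerate every non-empty subset up to max_size and keep the most profitable one."""
    n_items = len(utilities)
    max_size = n_items if max_size is None else max_size
    best_set, best_rev = (), 0.0
    for size in range(1, max_size + 1):
        for subset in combinations(range(n_items), size):
            revenue = expected_revenue(subset, utilities, prices)
            if revenue > best_rev:
                best_set, best_rev = subset, revenue
    return best_set, best_rev


utilities = [0.0, 0.0, 0.0]  # illustrative utilities
prices = [10.0, 6.0, 2.0]    # illustrative prices
best_set, best_rev = best_assortment(utilities, prices)
```

In this toy instance, offering the cheapest item lowers expected revenue because it draws demand away from the two higher-priced items, so the best assortment excludes it.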

Memory usage: a case study

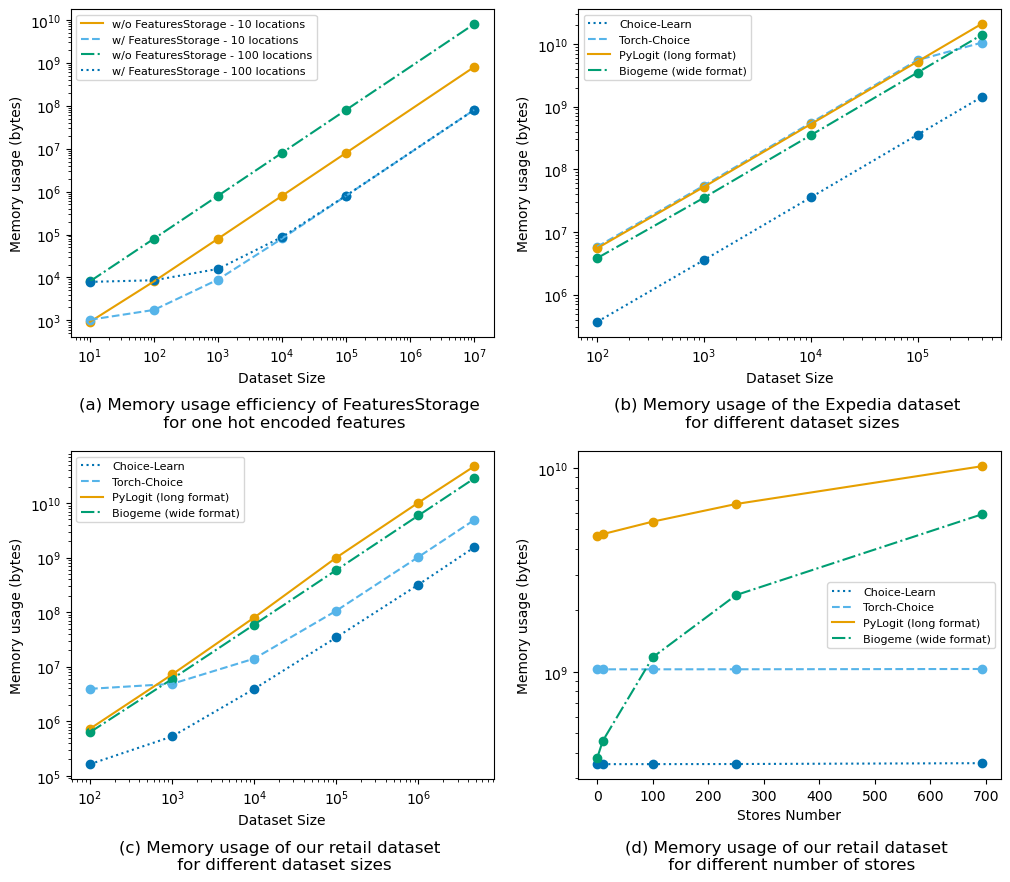

In Figure 3 (a), we provide numerical examples of memory usage to showcase the efficiency of the FeaturesStorage. We consider a feature repeated in a dataset, such as a one-hot encoding for locations, represented by a matrix of shape (#locations, #locations) where each row refers to one location.
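The order of magnitude of the savings can be checked with a back-of-the-envelope NumPy computation. The sizes below (100 locations, 10,000 recorded choices) and the dtypes are arbitrary assumptions and do not correspond to the experiments reported in Figure 3.

```python
import numpy as np

n_locations = 100   # assumed number of locations
n_choices = 10_000  # assumed number of recorded choices

# One-hot matrix of shape (#locations, #locations): row k encodes location k.
one_hot = np.eye(n_locations, dtype=np.float32)

# One location ID per recorded choice.
rng = np.random.default_rng(0)
location_ids = rng.integers(0, n_locations, size=n_choices, dtype=np.int32)

# Naive storage: the one-hot row is repeated for every choice.
repeated = one_hot[location_ids]  # shape (n_choices, n_locations)

# Storage by reference: the matrix is kept once, plus one ID per choice.
by_reference_bytes = one_hot.nbytes + location_ids.nbytes

print(f"repeated one-hot rows: {repeated.nbytes:,} bytes")
print(f"stored by reference:   {by_reference_bytes:,} bytes")
```

With these sizes, the repeated representation takes 50 times more memory than the by-reference one, and the gap widens as the number of choices grows.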

We compare four data handling methods on the Expedia dataset (Ben Hamner et al., 2013): pandas.DataFrames (The pandas development team, 2020) in long and wide formats, both of which are used in choice modeling packages, as well as Torch-Choice and Choice-Learn. Figure 3 (b) shows the results for various sample sizes.

Finally, in Figure 3 (c) and (d), we observe memory usage gains on a proprietary dataset in brick-and-mortar retailing consisting of the aggregation of more than 4 billion purchases in Konzum supermarkets in Croatia. Focusing on the coffee subcategory, the dataset specifies, for each purchase, which products were available, their prices, as well as a one-hot representation of the supermarket.
