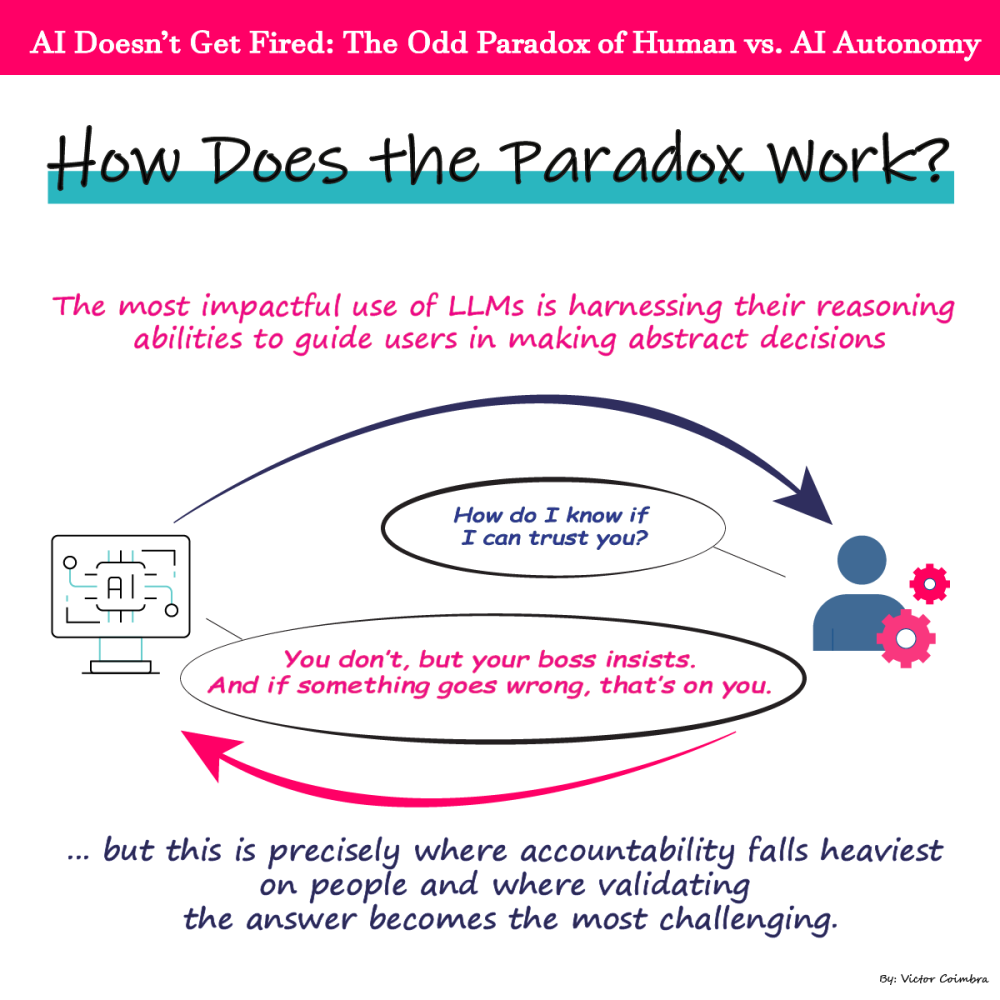

If your boss asks you for a critical number and you retrieve it with your company’s “Ultra Secure Internal GPT,” only to find the result is wrong, whose fault is it? The answer, as we all know, is yours for failing to validate the output. This creates a paradox: companies push AI to accelerate decision-making, yet they place ultimate responsibility for AI-driven outcomes on humans. The question then becomes: What is the sweet spot between AI autonomy and human oversight?

Historically, we’ve viewed machines as binary tools—right or wrong (e.g., a calculator claiming 1+1=3 would immediately be deemed broken). But AI operates on probabilities, much like human reasoning. This demands a mindset shift: we must stop treating AI outputs as definitive answers and start treating them as inputs to a broader decision-making process.

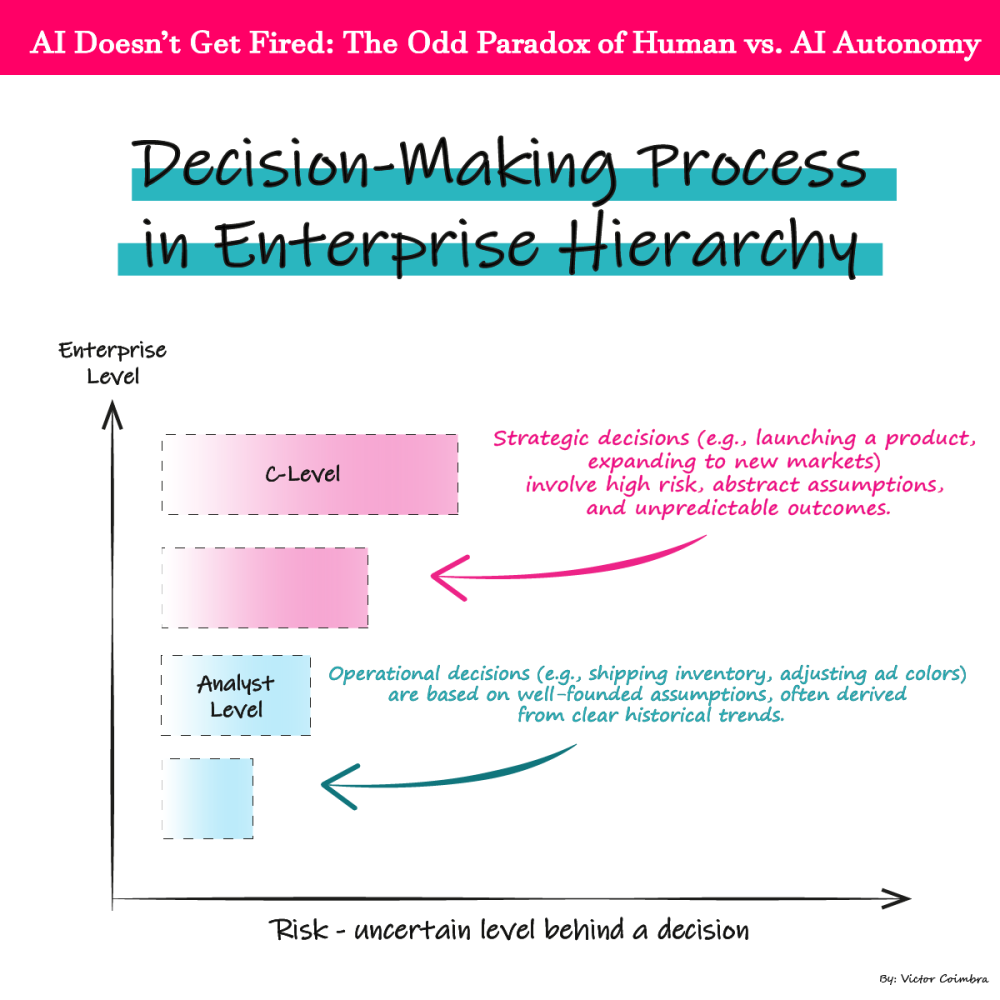

To refine this balance, we need to dissect how enterprises approach decisions. It all hinges on one word: risk—specifically, the financial impact of being wrong. Organizational hierarchies reflect this clearly:

- Operational decisions (e.g., shipping inventory, adjusting ad colors) carry low risk and reversible costs.

- Strategic decisions (e.g., launching a product, expanding to new markets) involve high risk, abstract assumptions, and unpredictable outcomes.

Humans follow the same risk-based logic. The more abstract and uncertain the assumptions behind a decision, the higher the organizational rank required to approve it. A CEO’s choices (e.g., predicting market shifts or consumer behavior) rely on chaotic, ambiguous data, while a manager’s inventory calculations use concrete historical trends.

Translating this to AI: The level of human accountability should correlate with the abstraction of the data and assumptions behind the AI’s output. For example:

- Low abstraction (e.g., demand forecasting using sales history): Minimal human oversight.

- High abstraction (e.g., market-entry strategies using sentiment analysis): Human judgment is non-negotiable.
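To make the abstraction-to-oversight rule concrete, here is a minimal Python sketch of how a team might encode it as a review policy. The tier names, the `DecisionProfile` fields, the 0-to-1 abstraction score, and the thresholds are all hypothetical choices for illustration, not an established framework; real thresholds would depend on each organization’s own appetite for risk.

```python
from dataclasses import dataclass
from enum import Enum


class Oversight(Enum):
    """Hypothetical review tiers, from lightest to heaviest."""
    SPOT_CHECK = "spot-check a sample of AI outputs"
    HUMAN_REVIEW = "a human reviews each output before it is used"
    HUMAN_DECIDES = "AI output is one input; a senior owner makes the call"


@dataclass
class DecisionProfile:
    name: str
    # Hypothetical score: 0.0 = concrete historical data, 1.0 = highly ambiguous assumptions.
    abstraction: float
    # Can the cost of being wrong be undone cheaply?
    reversible: bool


def required_oversight(d: DecisionProfile) -> Oversight:
    """Map a decision's abstraction and reversibility to an oversight tier.

    The 0.3 and 0.7 thresholds are illustrative assumptions, not recommendations.
    """
    if not 0.0 <= d.abstraction <= 1.0:
        raise ValueError("abstraction must be between 0 and 1")
    # Highly abstract or irreversible decisions always escalate to a human owner.
    if d.abstraction >= 0.7 or not d.reversible:
        return Oversight.HUMAN_DECIDES
    # Middle ground: AI output is used only after a human reviews it.
    if d.abstraction >= 0.3:
        return Oversight.HUMAN_REVIEW
    # Concrete, reversible decisions: occasional sampling is enough.
    return Oversight.SPOT_CHECK


if __name__ == "__main__":
    forecast = DecisionProfile("demand forecast from sales history", 0.2, reversible=True)
    market_entry = DecisionProfile("market entry from sentiment analysis", 0.9, reversible=False)
    for d in (forecast, market_entry):
        print(f"{d.name}: {required_oversight(d).name}")
```

With these assumed thresholds, the demand forecast lands in `SPOT_CHECK` and the market-entry decision in `HUMAN_DECIDES`, mirroring the two examples above. Treating irreversibility as an automatic escalation reflects the earlier point that risk is ultimately about the cost of being wrong.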

The Double Paradox: AI’s greatest strength, navigating ambiguity, is also its greatest liability. Its value peaks in high-stakes, uncertain decisions that require processing vast amounts of data. Yet this is exactly where humans must rely most on probabilistic reasoning, even as accountability rests most heavily on them. The result is an inherent tension:

- On one hand, AI excels in chaotic scenarios (e.g., predicting consumer trends in a volatile market) because it processes vast datasets humans can’t.

- On the other hand, humans bear sole responsibility for decisions in those same scenarios, even though the data driving them is inherently unstable.

The unique power of Large Language Models (LLMs) lies in their ability to simulate reasoning—not just automate tasks. If you want simple “if-this-then-that” logic, cheaper tools exist. LLMs thrive where ambiguity reigns: they parse unstructured data, infer context, and generate probabilistic pathways that mimic human intuition.

This paradox forces a critical question: If humans remain accountable, why use AI in high-risk scenarios at all? The answer lies in reframing AI’s role. It’s not about outsourcing decisions—it’s about shrinking the unknown. AI doesn’t eliminate risk; it gives humans a structured way to interrogate chaos.

How to Leverage AI Without Surrendering Agency

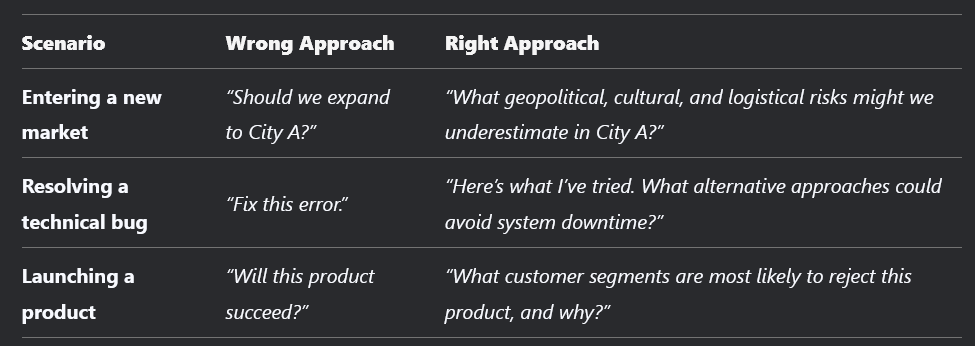

The key is to treat AI as a collaborative challenger, not a decision-maker. For example:

The pattern? AI thrives when humans ask it to challenge assumptions, not confirm them. This shifts the mindset from “What’s the answer?” to “What are we missing?”

The paradox is clear: AI’s value grows with ambiguity, but so does human accountability. The sweet spot isn’t about balancing autonomy—it’s about redefining collaboration. Use AI to map the minefield of uncertainty, but let humans choose the path.

If companies fail to train employees for this shift, the paradox will escalate. Teams will resent AI’s outputs, doubting every answer while bearing full responsibility for outcomes. Organizations will stagnate, wasting time debating whether to “trust” AI instead of leveraging it to accelerate decisions. Worse, they’ll fall behind competitors who embrace a simple truth: The question of “Can we trust AI?” is irrelevant—AI isn’t accountable, and we’ll never truly know if it’s “right” or “wrong.”

The solution? Normalize AI as a tool that reveals risk through probabilistic outcomes:

- Reward curiosity and scenario simulations, not answers carved in stone.

- Decouple AI from blame. Label its outputs as “input” (not “advice”), freeing humans to critique without defensiveness.

- Train for probabilistic thinking. Teach employees to interpret confidence intervals, scenario ranges, and bias flags—not just “yes/no” outputs.

When AI answers go unvalidated, humans get fired. When humans learn to wield AI’s probabilistic power, companies turn uncertainty into strategy.
