How we automated the management of an account with more than 50 users while complying with data governance standards

More and more companies put Snowflake at the heart of their data platforms. Even if it is a managed solution, you still have to administrate the environment. It can be a challenge, especially for large companies.

One of the challenges is access control. It is a critical piece of any data governance program. Snowflake provides out-of-the-box features to help tackle this challenge. But when you have dozens of users and terabytes of data, built-in features are not enough. You need to think about a strategy to manage your account.

We have been there. For one of our clients, we managed an account with more than 50 active users. We designed a solution to scale access control management.

This article will describe the key learnings of the past year.

Initial situation

Before we joined the company, the account was set up and a lot of good ideas had been implemented. Custom roles had been created. Users were managed carefully. But there were a few limitations:

We created a new system to manage access control and fight against those shortcomings.

Access control framework design

First, we revoked all default role grants (SYSADMIN, USERADMIN …) to users … Only a few people were able to assume those roles. They were the people in charge of the administration.

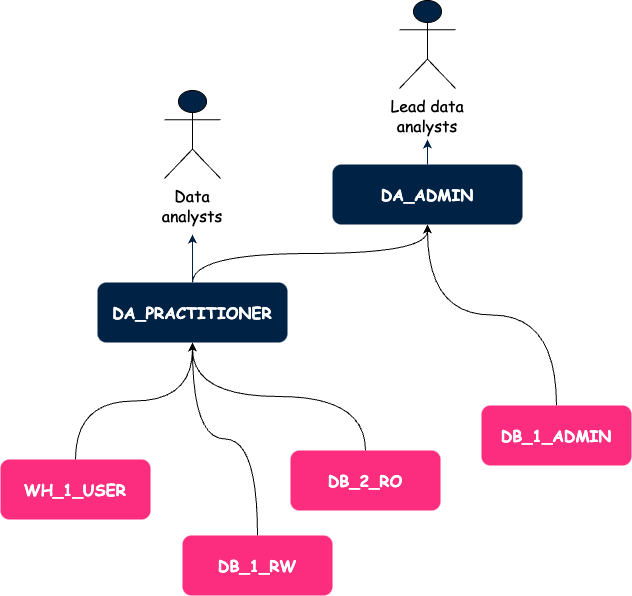

Second, we created a framework of access control based on custom roles. We defined two types of custom roles:

We chose to define access roles at the database level. It makes it easy to replicate the privileges from one environment to another using zero-copy cloning. It was also a trade-off between the flexibility we grant to end users and the strict application of the least privilege principle.

At the database level, we defined 3 levels of access roles:

We applied a similar strategy to warehouse access with two types of roles:

Below is an illustration of our framework. Arrows represent grants.

At this point, we had a better idea of how we would manage permissions but we also had issues with user management.

User management

We set up Single Sign On to allow Snowflake users to sign in via Azure Active Directory (AAD). Thus, we removed the complexity of maintaining two databases of users and the offboarding process was more robust. Indeed, we only had to disable the user in AAD and the deletion was automatically replicated to Snowflake.

As there is a mapping between AD groups and roles in Snowflake, we were able to grant a role to each user we create. We follow the same process for each new user.

It is the process for real human users, but it does not address one of the limitations mentioned at the beginning of this article: the use of personal credentials for automated jobs.

That is why we introduced service accounts. Service account management was very similar to what we just described. The only difference is that we create a functional role for each service account. There is a one-to-one relationship between service accounts and their roles. Thus, we strictly limit the scope of permissions for each service account.

Those steps were big improvements. Everything was documented. Teams rapidly adopted the new framework. We were happy.

But, we were still spending a lot of time granting access manually, and as it was very manual, it was also error-prone. The need for a tool to automate those tasks was clear.

Automation to the rescue

We had a few options:

We finally created a CLI in Python.

We prefer using Terraform to deploy infrastructure and only infrastructure. Undesired behaviors can happen when you start managing your users and your permissions with terraform. For example, secret rotation is very hard to manage.

We like bash but only for ad-hoc and simple operations. Here, we need to load config files, interact with the Snowflake API, and manipulate data structures. This is possible but it would be hard to maintain in the long run.

In addition to this aspect, we needed reliability. One way to achieve this is to write tests. This is easier to do in programming languages such as Python.

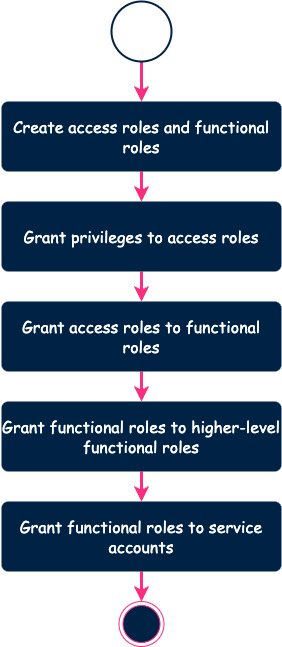

When you execute the tool, here is what happens behind the scenes.

By design, the tool does not create a role that already exists. Same for privileges. The tool computes the difference between the configuration and the remote environment and applies the necessary changes. It allows us to avoid any downtime.

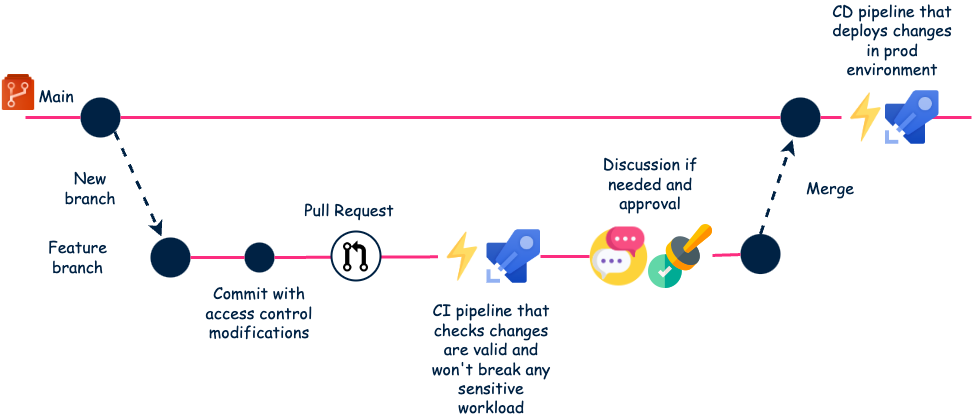

We initially ran this tool locally. But this could lead to issues. For example, we could have conflicts between two engineers trying to make changes at the same time. That is why we set up a workflow based on the CI/CD capabilities of Azure DevOps (ADO).

Note: we were using ADO but you can do the same with GitHub, GitLab or Bitbucket.

Here is the final process.

This fully automated workflow works very well. Tests are catching defaults early in the CI pipeline. And mandatory review is also a way of preventing incidents.

Also, pull requests serve as a kind of documentation of all demands.

Conclusion

It has been a bit less than a year since we started using this solution. Although it required an initial time investment for the implementation, it was totally worth it.

We now spend more time discussing with the users about their requests and not on the actual implementation. Most of the requests can be resolved in about 10 lines of YAML. This is very efficient and it scales well. We still welcome new users and we can face the demand. Also, we resolved the initial issues. So, it was a success!

Thanks to Samy Dougui who reviewed this article and worked with me on the design of this solution

BLOG

BLOG