5 February 2021

In this article, Data Scientist Maxime Lutel sums up his learnings from the M5 sales forecasting competition, which consisted in predicting future sales in several Walmart stores. He will walk you through our solution and discuss what machine learning model worked the best for this task.

Using machine learning to solve retailers’ business challenges

Accurate sales forecasting is critical for retail companies to produce the required quantity at the right time. But even if avoiding waste and shortage is one of their main concerns, retailers still have a lot of room for improvement. At least, that’s what people working at Walmart think, as they launched an open data science challenge in March 2020— the M5 competition — to see how they could enhance their forecasting models.

The competition aimed at predicting future sales at the product level, based on historical data. More than 5000 teams of data lovers and forecasting experts have discussed for months about the methods, features and models that would work best to address this well-known machine learning problem. These debates highlighted some recurring issues encountered in almost all forecasting projects. And even more importantly, they brought out a wide variety of approaches to tackle them.

This article aspires to summarize some key insights that emerged from the challenge. At Artefact, we believe in learning by doing, so we decided to take a shot and code our own solution to illustrate it. Now let’s go through the whole forecasting pipeline and stop along the way to understand what worked and what failed.

Problem statement: Hierarchical times series forecasting

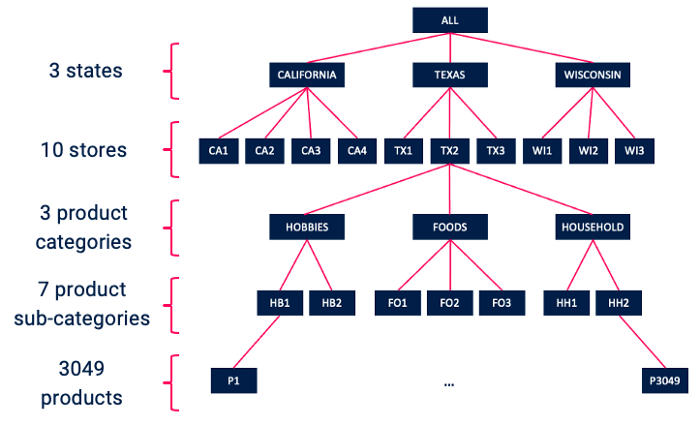

The dataset contains 5-year historical sales, from 2011 to 2016, for various products and stores. Some additional information is provided, such as sell prices and calendar events. Data is hierarchically organized: stores are divided into 3 states, and products are grouped by categories and sub-categories.

Our task is to predict sales for all products in each store, on the days right after the available dataset. It means that 30 490 forecasts have to be made for each day in the prediction horizon.

This hierarchy will guide our modeling choices, because interactions within product categories or stores contain very useful information for prediction purposes. Indeed, items in the same stores and categories might have similar sales evolution, or on the contrary they could cannibalize each other. Therefore, we are going to describe each sample by features that capture these interactions, and prioritize machine learning based approaches over traditional forecasting ones, to consider this information when training the model.

Two main challenges: intermittent values and an extended prediction horizon

At this stage, you might think that it is a really common forecasting problem. You’re right and that’s why it is interesting: it can relate to a wide range of other projects, even if each industry has its own characteristics. However, this challenge has 2 important specificities that will make the task more difficult than expected.

The first one is that the time series we are working with have a lot of intermittent values, i.e. long periods of consecutive days with no sales, as illustrated on the plot below. This could be due to stock-outs or limited shelves’ area in stores. In any case, this complicates the task, since the error will skyrocket if sales are predicted at a regular level while the product is out of shelves.

The second one comes from the task itself, and more precisely from the size of the prediction horizon. Competitors are required to generate forecasts not only for the next week, but for a 4-week period. Would you rather rely on the weather forecast for the next day or for 1 month from now? The same goes for sales forecasting: an extended prediction horizon makes the problem more complex as uncertainty increases with time.

Feature engineering — Modeling sales’ driving factors

Now that we have understood the task at hand, we can start to compute features modeling all phenomenons that might affect sales evolution. The objective here is to describe each triplet Day x Product x Store by a set of indicators that capture the effects of factors such as seasonality, trends or pricing.

Seasonality

Rather than using the sales date directly as a predictor, it is usually more relevant to decompose it into several features to characterize seasonality: year, month, week number, day of the week… The latter is particularly insightful because the problem has a strong weekly periodicity: sales volumes are bigger on the weekends, when people spend more time in supermarkets.

Calendar events such as holidays or NBA finals also have a strong seasonal impact. One feature has been created for each event, with the following values:

The idea is to model the seasonal impact not only on the D-day, but also before and after. For example, a product that will be offered a lot as a Christmas present will experience a sales peak on the days before and a drop right after.

Trends

Recent trends also provide useful information on future sales and are modeled thanks to lag features. A lag is the value of the target variable shifted by a certain period. For any specific item in a given store, the 1-week lag value would be the sales made one week ago for this particular item and store. Different shift values can be considered, and the average of several lags is computed as well, to get more robust predictors. Lags can also be calculated on aggregated sales to capture more global trends, for example at the store level or at the product category level.

Pricing

A product’s price can change from one store to another, and even from one week to another within the same store. These variations strongly influence sales and should therefore be described by some features. Rather than absolute prices, relative price differences between relevant products are more likely to explain sales evolutions. That’s why the following predictors have been computed:

Categorical variables encoding

Categorical variables such as the state, the store, the product name or its category also hold a significant predictive power. This information has to be encoded into features to help the model leveraging the dataset hierarchy. One-hot encoding is not an option here because some of these categorical variables have a very high cardinality (3049 distinct products). Instead, we have used an ordered target encoding, which means that each observation is encoded by the average sales of past observations having the same categorical value. The dataset is ordered by time for this task to avoid data leakage.

All categorical variables and some of their combinations have been encoded with this method. This results in very informative features, the best one being the encoding of product and store combination. If you wish to experiment other encoders, you can find a wide range of methods here.

Tweedie loss to handle intermittent values

Different possible strategies can be used to deal with the intermittent values issue. Some participants decided to create 2 separate models: one to predict whether or not the product will be available on a specific day, and a second one to forecast sales. Like many others, we have chosen another option, which is to rely on an objective function adapted to the problem: the tweedie loss.

Without going into the mathematical details, let’s try to understand why this loss function is appropriate for our problem, by comparing sales distribution in the training data and the tweedie distribution:

They look quite similar and both have values concentrated around 0. Setting the tweedie loss as an objective function will basically force the model to maximize the likelihood of that distribution and thus predict the right amount of 0s. Besides, this loss function comes with a parameter — whose values are ranging from 1 to 2 — that can be tuned to fit the distribution of the problem at hand:

Based on our dataset distribution, we can expect the optimal value to be between 1 and 1.5, but to be more precise we will tune that parameter later with cross-validation. This objective function is also available for other gradient boosting models such as XGBoost or CatBoost, so it’s definitely worth trying if you’re dealing with intermittent values.

How to forecast 28 days in advance?: Making the most out of lag features

As explained above, lag features are sales shifted by a given period of time. Thus, their values depend on where you stand in the forecasting horizon. The sales made on a particular day D can be considered as a 1-day lag if you’re predicting one day ahead, or as a 28-day lag if you’re predicting 28 days ahead. The following diagram illustrates this point:

This concept is important to understand what features will be available at prediction time. Here, we are on day D and we would like to forecast sales for the next 28 days. If we want to use the same model — and thus the same features — to make predictions for the whole forecasting horizon, we can only use lags that are available to predict all days between D+1 and D+28. This means that if we use the 1-day lag feature to train the model, that variable will also have to be filled for predictions at D+2, D+3, … and D+28, whereas it refers to dates in the future.

Still, lags are probably the features with the biggest predictive power, so it’s important to find a way to make the most out of this information. We have considered 3 options to get around this problem, let’s see how they performed.

Option 1: One model for all weeks

The first option is the most obvious one. It consists in using the same model to make predictions for all weeks in the forecasting horizon. As we just explained, it comes with a huge constraint: only features available for predicting at D+28 can be used. Therefore, we have to get rid of all the information given by the 27 most recent lags. It is a shame as the most recent lags are also the most informative ones, so we have considered another option.

Option 2: Weekly models

This alternative consists in training a different LightGBM model for each week. On the diagram above, every model is learning from the most recent possible lags with respect to the constraint imposed by its prediction horizon. Following the same logic as the previous option, it means that each model can leverage all lags except those that are newer than the farthest day to predict.

More precisely:

This method allows us to better capitalize on lag information for the first 3 weeks and thus improved our solution’s forecast accuracy. It was worth it because it was a Kaggle competition, but for an industrialized project, questions of complexity, maintenance and interpretability should also come into consideration.

Indeed, this option could be computationally expensive and if we are aiming at a rollout on a whole country scale, it would require maintaining hundreds of models in live. In that case, it would be necessary to evaluate if the performance increment is large enough to justify this more complex implementation.

Option 3: Recursive modeling

The last option also uses weekly models, but this time in a recursive way. Recursive modeling means that predictions generated for a given week will be used as lag features for the following weeks. This happens sequentially: we first make forecasts for the first week by using all lags except the 6 most recent ones. Then, we predict week 2 by using our previous predictions as 1-week lags, instead of excluding more lags like in option 2. By repeating the same process, we always get recent lags available, even for weeks 3 and 4, which allows us to leverage this information to train the models.

This method is worth testing, but keep in mind that it is quite unstable as errors spread from week to week. If the first week model makes important errors, these errors will be taken as the truth by the next model, which will then inevitably be poorly performing, and so on. That’s why we decided to stick with option 2, that seems to be more reliable.

Ensuring model robustness with an appropriate cross-validation: Why cross-validation is critical for time series

In any machine learning project, adopting an appropriate cross-validation strategy is critical to simulate correctly out-of-sample accuracy, select hyper-parameters thoroughly and avoid over-fitting. When it comes to forecasting, this has to be done carefully because there is a temporal dependency between observations that must be preserved. In other words, we want to prevent the model from looking into the future when we train it.

The validation period during which the model is tested also has a greater importance when dealing with time series. Model performance and the optimal set of hyper-parameters can vary a lot depending on the period over which the model is trained and tested. Therefore, our objective is to find which parameters are the most likely to maximize performance not over a random period, but over the period that we want to forecast, i.e. the next 4 weeks.

Adapting the validation process to the problem at hand

To achieve that goal, we have selected 5 validation sets that were relevant to the prediction period. The diagram below shows how they are distributed over time. For each cross-validation fold, the model is trained with various combinations of parameters on the training set and evaluated on the validation set using the root mean squared error.

Folds 1, 2 and 3 aim at identifying parameters that would have maximized performance over recent periods, basically over the last 3 months. The problem is that these 3 months might have different specificities than the upcoming period that we are willing to forecast. For example, let’s imagine that stores launched a huge promotional season over the last few months, and that it just stopped today.

These promotions would probably impact the model’s behavior, but it would be risky to rely only on these recent periods to tune it because this is not representative of what is going to happen next.

To mitigate this risk, we have also included folds 4 and 5, which correspond to the forecasting period respectively shifted by 1 and 2 years. These periods are likely to be similar because the problem has a strong yearly seasonality, which is often true in retail. In case we had a different periodicity, we could choose any cross-validation strategy that has more business sense. In the end, we have selected the hyper-parameters’ combination with the lowest error over the 5 folds to train the final model.

Results

The different techniques mentioned above allowed us to reach a 0.59 weighted RMSSE — the metric used on Kaggle — which is equivalent to a weighted forecast accuracy of 82.8%. The chart below sums up the incremental performance generated by each step:

These figures are indicative: the incremental accuracy also depends on the order in which each step is implemented.

Key takeaways

We have learned a lot from this challenge thanks to participants’ shared insights and we hope it gave you food for thoughts as well. Here are our key takeaways:

Thanks a lot for reading up to now and don’t hesitate to reach out if you have any comment on the topic!

BLOG

BLOG