TL;DR

Training an ML model can be complicated to set up and replicate:

• The code might live in a notebook on a VM that you have to start manually and shut down once training is finished

• You may have to upload a training dataset each time you want to train it again

• You may need to dig into your code just to change a single parameter

• etc.

In this article, we’ll see how we automated the training process of FastAI’s text classifiers, using Google Cloud AI Platform.

In a second article, we’ll see how we managed to deploy such models with AI Platform and TorchServe.

Who is this for?

If you’re working on a project that requires training ML models multiple times, and you’re tired of running your trainings manually, you’ve come to the right place.

If you’re tired of managing VMs for your training and would rather spend your time on something more interesting, like reading Medium articles, you’ve also come to the right place!

This article is for those who want to know how they can save time and resources by using AI Platform to train their ML models. We’ll show how we applied this to a project we worked on, using FastAI.


Prerequisites if you want to reproduce what we did

AI Platform is part of the Google Cloud Platform (GCP) suite, as are the other services we used to automate our training pipeline. Here are the GCP services we used:

Google Cloud SDK, Docker and Nvidia-docker need to be installed and set up on the machine where the Docker image is built. Nvidia-docker lets you run the built Docker image directly on the machine’s GPU (if it has one), so you can check that the code has no errors and that the training will run as expected before launching it on AI Platform.

As we’ll see later in the article, our Docker image is based on the nvidia/cuda image, so the CUDA libraries needed for GPU training are already included when the image is built. The Nvidia driver itself is provided by the host machine.
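Before building anything, it can be worth checking that these tools are actually available on the build machine. The snippet below is a minimal sketch of such a pre-flight check, not part of our repo; the executable names in `REQUIRED_TOOLS` are assumptions to adapt to your setup (for instance, a GPU setup based only on the newer Nvidia container toolkit may not ship an `nvidia-docker` wrapper).

```python
import shutil
from typing import Iterable, List

# Assumed executable names; adjust to what your machine actually uses.
REQUIRED_TOOLS = ("gcloud", "docker", "nvidia-docker")


def missing_tools(tools: Iterable[str] = REQUIRED_TOOLS) -> List[str]:
    """Return the tools that cannot be found on the PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        print("All required tools found.")
```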

Context

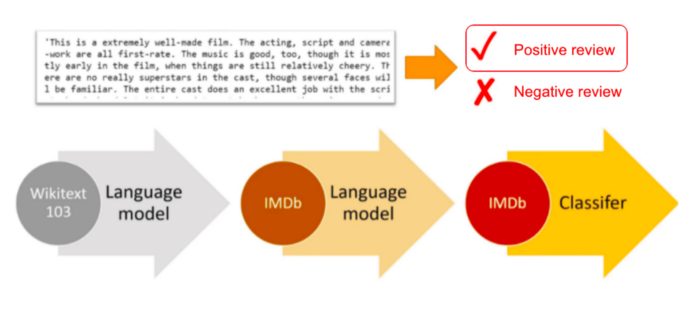

We’ll see in this article how we automated the training of a text classifier built with FastAI, a library that lets users create powerful models using the ULMFiT method.

We already presented it in another Medium article, so I invite you to check it out if you want to know more about it.

Since what we’ll see in this article applies to any framework, you don’t need to be familiar with FastAI to keep reading. All you need to know is that we trained our text classifier from a pre-trained model and a labelled training dataset.

How we set up the training with AI Platform

To automate model training with AI Platform, you need to specify which code should run in which environment when the training command is called. The approach we chose was to create a Docker image that contains all the training code and its environment, so that AI Platform only has to create a container from this image each time you ask it to train a model. We’ll see in this part how we did it.

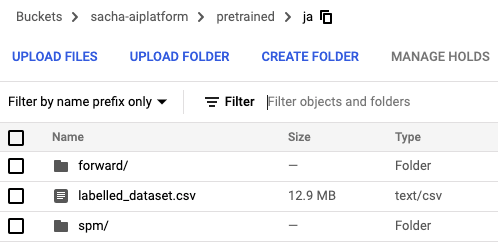

Store all necessary files in a GCS bucket

Before creating the Docker image containing our training code, we had to think about the files used during the training of a FastAI text classifier. We decided to store all the files needed for training in a GCS bucket, organised into one folder per language, with a specific name given to each file.

In our training code, we then implemented a method that retrieves those required files from GCS, taking only the target language as an argument.
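A minimal sketch of such a helper, assuming the google-cloud-storage Python client (the repo may well rely on gsutil instead, which the Dockerfile installs). The file names in `REQUIRED_FILES` and the function names are hypothetical; only the one-folder-per-language layout comes from our setup.

```python
from pathlib import Path
from typing import List

# Hypothetical file names: adapt them to what your language folders contain.
REQUIRED_FILES = ("train.csv", "lm_encoder.pth", "vocab.pkl")


def blob_names_for_language(lang: str) -> List[str]:
    """Build the object names stored in the folder of one language, e.g. 'fr/train.csv'."""
    return ["{}/{}".format(lang, name) for name in REQUIRED_FILES]


def download_language_files(bucket_name: str, lang: str,
                            dest_dir: str = "/root/models") -> List[Path]:
    """Download every required file for `lang` from GCS into `dest_dir`."""
    # Imported lazily so the naming helper above works without the client library.
    from google.cloud import storage

    bucket = storage.Client().bucket(bucket_name)
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    local_paths = []
    for blob_name in blob_names_for_language(lang):
        local_path = dest / Path(blob_name).name
        bucket.blob(blob_name).download_to_filename(str(local_path))
        local_paths.append(local_path)
    return local_paths
```

With this layout, switching languages only means calling `download_language_files("my-bucket", "fr")` with another language code.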

Write the training code

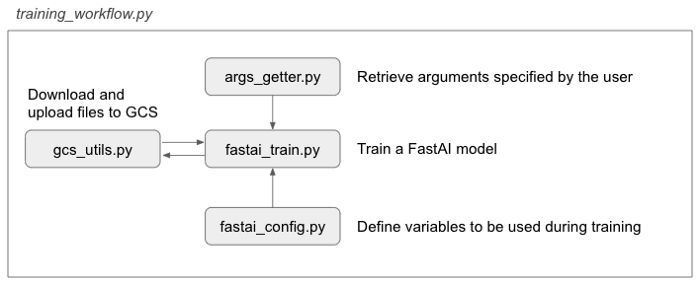

After uploading the necessary files to GCS, we created a repo containing the code for training our models, to be packaged into a Docker image later on.

As you can see in the linked repo, we split the training code into separate files to handle each part of the training pipeline properly.

We defined a file that executes the whole training workflow as follows:

fastai_train.py is the only file that directly uses FastAI methods, so anyone wanting to use another framework for their training would only have to modify this file (and, of course, the content of the config file).
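To illustrate why this separation matters, here is a hypothetical sketch of a workflow function that chains the three stages and receives the framework-specific training step as a parameter. The function and type names are illustrative, not those of training_workflow.py.

```python
from argparse import Namespace
from typing import Callable, Dict, List, Tuple

# Hypothetical stage signatures; the real gcs_utils.py and fastai_train.py may differ.
DownloadFn = Callable[[str, str], List[str]]                         # (bucket, lang) -> local files
TrainFn = Callable[[List[str], int], Tuple[str, Dict[str, float]]]  # (files, epochs) -> (model path, metrics)
UploadFn = Callable[[str, str, str, Dict[str, float]], None]        # (bucket, model dir, model path, metrics)


def run_training_workflow(args: Namespace, download: DownloadFn,
                          train: TrainFn, upload: UploadFn) -> Dict[str, float]:
    """Chain download -> train -> upload. Only `train` depends on the ML framework."""
    local_files = download(args.bucket_name, args.lang)
    model_path, metrics = train(local_files, args.epochs)
    upload(args.bucket_name, args.model_dir, model_path, metrics)
    return metrics
```

Swapping FastAI for another framework then only means passing a different `train` callable; the GCS download and upload steps stay untouched.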

The next step was to create a Docker image containing everything needed to run the training correctly.

Create the Dockerfile

After preparing the training code, we had to write a Dockerfile, build the image and push it to Google Container Registry (GCR), so that AI Platform could retrieve it and run the training in the right environment.

Since our model had to be trained on a GPU, we based our image on the nvidia/cuda Docker image, so the required CUDA libraries were already installed.

```dockerfile
# Dockerfile

FROM nvidia/cuda:10.2-devel

# System tools used to download and build dependencies
RUN apt-get update && apt-get install -y --no-install-recommends \
    wget \
    build-essential

RUN apt-get update && apt-get install -y --no-install-recommends \
    python3-dev \
    python3-setuptools \
    python3-pip

RUN pip3 install pip==20.3.1

WORKDIR /root

# Create directories to contain code and downloaded model from GCS
RUN mkdir /root/trainer
RUN mkdir /root/models

# Copy requirements
COPY requirements.txt /root/requirements.txt

# Install pytorch
RUN pip3 install torch==1.8.0

# Install requirements
RUN pip3 install -r requirements.txt

# Installs google cloud sdk, this is mostly for using gsutil to export model.
RUN wget -nv \
    https://dl.google.com/dl/cloudsdk/release/google-cloud-sdk.tar.gz && \
    mkdir /root/tools && \
    tar xvzf google-cloud-sdk.tar.gz -C /root/tools && \
    rm google-cloud-sdk.tar.gz && \
    /root/tools/google-cloud-sdk/install.sh --usage-reporting=false \
        --path-update=false --bash-completion=false \
        --disable-installation-options && \
    rm -rf /root/.config/* && \
    ln -s /root/.config /config && \
    # Remove the backup directory that gcloud creates
    rm -rf /root/tools/google-cloud-sdk/.install/.backup

# Copy files
COPY trainer/fastai_train.py /root/trainer/fastai_train.py
COPY trainer/fastai_config.py /root/trainer/fastai_config.py
COPY trainer/args_getter.py /root/trainer/args_getter.py
COPY trainer/gcs_utils.py /root/trainer/gcs_utils.py
COPY trainer/training_workflow.py /root/trainer/training_workflow.py

# Path configuration
ENV PATH=$PATH:/root/tools/google-cloud-sdk/bin

# Make sure gsutil will use the default service account
# (printf writes a real newline between the two lines of the config file)
RUN printf '[GoogleCompute]\nservice_account = default\n' > /etc/boto.cfg

# No interactive `gcloud auth login` step: on AI Platform, the container
# already runs with the job's service account credentials.

# Sets up the entry point to invoke the trainer.
ENTRYPOINT ["python3", "trainer/training_workflow.py"]
```

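As you can see above, the Dockerfile executes the following steps to create the image:

• Start from the nvidia/cuda base image, which provides the CUDA libraries

• Install system tools, Python 3 and pip

• Create the directories for the training code and for the models downloaded from GCS

• Install PyTorch and the other Python requirements

• Install the Google Cloud SDK, mainly to use gsutil

• Copy the training code into the image

• Configure gsutil to use the default service account

• Set the training workflow script as the entry point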

Build the image and push it to GCR

After writing the Dockerfile, we had to build the image so it could be pushed to GCR. As specified in the repo, several local variables needed to be defined first, such as the IMAGE_URI in GCR, the REGION we operate in, etc.
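For illustration, here is a small hypothetical helper (not from our repo) that builds a GCR image URI in the usual `HOST/PROJECT_ID/IMAGE:TAG` form and fails early when one of the expected variables is not defined. The variable and image names used with it are assumptions; regional hosts such as `eu.gcr.io` follow the same pattern as `gcr.io`.

```python
import os
from typing import Dict, Iterable


def gcr_image_uri(project_id: str, image_name: str, tag: str = "latest",
                  host: str = "gcr.io") -> str:
    """Build a Container Registry URI of the form HOST/PROJECT_ID/IMAGE:TAG."""
    return "{}/{}/{}:{}".format(host, project_id, image_name, tag)


def require_env(names: Iterable[str]) -> Dict[str, str]:
    """Return the requested environment variables, failing early if any is unset or empty."""
    names = list(names)
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise RuntimeError("undefined variables: " + ", ".join(missing))
    return {name: os.environ[name] for name in names}
```

A build script could, for example, call `require_env(["IMAGE_URI", "REGION", "BUCKET_NAME"])` before running any Docker or gcloud command.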

The image was built by running this command:

```bash
docker build -f Dockerfile -t $IMAGE_URI ./
```

Before pushing the image to GCR, we wanted to make sure the training would work when called. Since our VM had a GPU, we first ran the image locally:

```bash
docker run --runtime=nvidia $IMAGE_URI --epochs 1 --bucket-name $BUCKET_NAME
```

This step is optional, but it can save you a lot of time: any error in your code shows up immediately instead of after submitting a job to AI Platform.

Finally, we pushed the image to GCR with the following command, $IMAGE_URI being the variable holding the image’s URI in GCR (Docker must be authorized to push to GCR beforehand, for example with `gcloud auth configure-docker`):

```bash
docker push $IMAGE_URI
```

Run and follow the job

After these steps, the training could be launched with a single terminal command, as long as the Google Cloud SDK was set up and the local variables were defined:

```bash
gcloud ai-platform jobs submit training $JOB_NAME \
    --scale-tier BASIC_GPU \
    --region $REGION \
    --master-image-uri $IMAGE_URI \
    -- \
    --lang=fr \
    --epochs=10 \
    --bucket-name=$BUCKET_NAME \
    --model-dir=$MODEL_DIR
```

This command asks AI Platform to pull the image from GCR using its IMAGE_URI, and then to run the training on a GPU machine (the BASIC_GPU scale tier) in the region REGION.
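If you launch jobs often, it can help to build this command programmatically. The sketch below uses hypothetical helper names (not from our repo): it generates a unique job name, since AI Platform rejects a job name that already exists in the project, and assembles the same `gcloud` call as an argument list. Job names must start with a letter and contain only letters, digits and underscores, which is why hyphenated prefixes are rejected.

```python
import re
import time
from typing import Dict, List, Optional

# AI Platform job names: a letter first, then letters, digits or underscores.
_JOB_NAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9_]*")


def make_job_name(prefix: str, timestamp: Optional[int] = None) -> str:
    """Append a Unix timestamp to `prefix` so that each submitted job name is unique."""
    ts = int(time.time()) if timestamp is None else timestamp
    name = "{}_{}".format(prefix, ts)
    if not _JOB_NAME_RE.fullmatch(name):
        raise ValueError("invalid AI Platform job name: {!r}".format(name))
    return name


def submit_command(job_name: str, region: str, image_uri: str,
                   trainer_args: Dict[str, str]) -> List[str]:
    """Build the `gcloud ai-platform jobs submit training` call as an argument list.

    Everything after the bare "--" is forwarded to the container's ENTRYPOINT.
    """
    cmd = [
        "gcloud", "ai-platform", "jobs", "submit", "training", job_name,
        "--scale-tier", "BASIC_GPU",
        "--region", region,
        "--master-image-uri", image_uri,
        "--",
    ]
    cmd += ["--{}={}".format(key, value) for key, value in trainer_args.items()]
    return cmd
```

The resulting list can be passed to `subprocess.run(cmd, check=True)`, which avoids shell quoting issues.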

In the gcloud command, we passed several arguments: the language of the training, the number of epochs, the name of the bucket used to download and upload files in GCS, and the directory where the trained model is stored. Everything after the bare `--` is forwarded to the container’s entry point, and these are the arguments retrieved by the args_getter.py file.
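The content of args_getter.py is not reproduced here. As a minimal sketch, assuming it relies on the standard argparse module, it could look like the following. The default values are hypothetical; `--lang` gets a default because the local `docker run` test above does not pass it.

```python
import argparse
from typing import List, Optional


def get_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the arguments forwarded by AI Platform after the bare `--`."""
    parser = argparse.ArgumentParser(description="Train a FastAI text classifier.")
    # Hypothetical defaults; only the flag names come from the gcloud command.
    parser.add_argument("--lang", default="fr", help="Language folder to read from the bucket.")
    parser.add_argument("--epochs", type=int, default=10, help="Number of training epochs.")
    parser.add_argument("--bucket-name", required=True, help="GCS bucket for inputs and outputs.")
    parser.add_argument("--model-dir", default="models", help="Bucket directory for the trained model.")
    # argparse exposes --bucket-name and --model-dir as args.bucket_name and args.model_dir.
    return parser.parse_args(argv)
```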

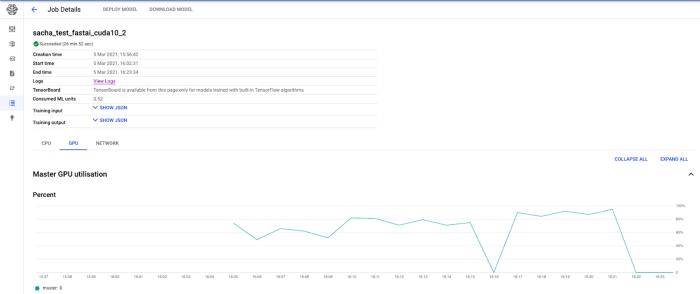

After running the command, the training started and a job was created in the AI Platform console, allowing us to monitor the progress of the training and check the logs of the machine running it.

A lot of information is available when looking at the job in the AI Platform console

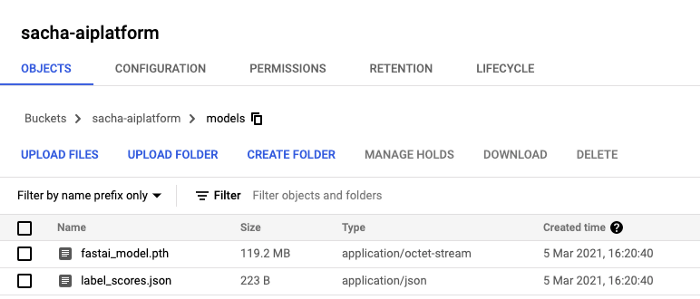

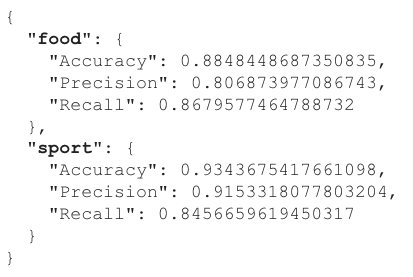

When the job completes, the trained model is saved in a GCS bucket as a .pth file, along with a .json file containing its performance metrics.
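A hypothetical sketch of this export step, again assuming the google-cloud-storage client; the `model.pth` and `metrics.json` object names are illustrative, not necessarily the ones we used.

```python
import json
from typing import Any, Dict


def output_blob_names(model_dir: str) -> Dict[str, str]:
    """Hypothetical naming scheme for the two artefacts produced by one run."""
    base = model_dir.rstrip("/")
    return {"model": base + "/model.pth", "metrics": base + "/metrics.json"}


def upload_results(bucket_name: str, model_dir: str, local_model_path: str,
                   metrics: Dict[str, Any]) -> None:
    """Upload the trained weights and a JSON report of their metrics to GCS."""
    # Imported lazily so output_blob_names() can be used without the client library.
    from google.cloud import storage

    bucket = storage.Client().bucket(bucket_name)
    names = output_blob_names(model_dir)
    bucket.blob(names["model"]).upload_from_filename(local_model_path)
    bucket.blob(names["metrics"]).upload_from_string(
        json.dumps(metrics, indent=2), content_type="application/json")
```

Storing the metrics next to the model makes it easy to compare runs later without reloading any weights.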

We decided to evaluate the results for each label separately, as if we had a binary classifier for each possible label.
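Concretely, treating each label as its own binary problem means counting, for every label, the true positives, false positives and false negatives against all the other labels. The sketch below assumes single-label predictions (one label per text) and uses precision, recall and F1 as example metrics; these metric names are illustrative, not a description of our .json report.

```python
from typing import Dict, List, Sequence


def per_label_metrics(y_true: Sequence[str], y_pred: Sequence[str],
                      labels: List[str]) -> Dict[str, Dict[str, float]]:
    """Score each label as a binary 'this label vs. all others' problem."""
    results = {}
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results[label] = {"precision": precision, "recall": recall, "f1": f1}
    return results
```

For example, with true labels `["a", "a", "b", "c"]` and predictions `["a", "b", "b", "c"]`, label `a` gets a precision of 1.0 but a recall of 0.5, a weakness that a single global accuracy figure would hide.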

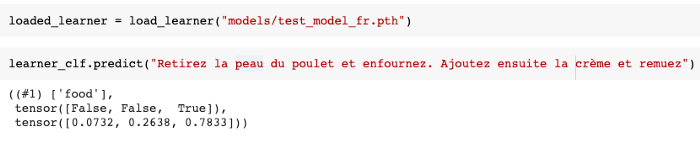

Since we used FastAI, the model file could then be loaded in any environment where FastAI is installed by calling FastAI’s load_learner() function.

Key takeaways

Automating the training of our models using AI Platform allowed us to save a lot of time, and made us consider various aspects of our model training to efficiently put it into production.

Here are some takeaways we gathered from this:

What’s next?

Now that we’ve explained how we automated the training of our model, we can show you how we made it easy to call on demand to classify files.

Stay tuned for the second part of this article that will explain everything you need to know to deploy your trained classifiers using AI Platform and TorchServe!

Thanks for reading!

We hope you’ve learned something today and that it will be useful for your future ML projects. Feel free to reach out if you have any questions or comments on this topic.
