
In the era of digital transformation, enterprises continuously accumulate massive data sets with growing scale and complexity.

For enterprises, a data lake is not just a technical means to store different types of data, but also an infrastructure to improve the efficiency of data analysis, support data-driven decision-making, and accelerate the development of AI. However, in real-time processing, streaming data analysis and complex business scenarios (e.g., user behaviour analysis, inventory management, fraud detection), traditional data lake architectures struggle to meet the demand for rapid response.

As a new generation of real-time data lake technology, Apache PAIMON is compatible with Apache Flink, Spark and other mainstream computing engines, and supports streaming and batch processing, fast querying and performance optimization, making it an important tool for accelerating AI transformation.

PAIMON Principles

Apache PAIMON is a storage and analytics system that supports large-scale real-time data updates and achieves efficient querying through LSM trees (log-structured merge-trees) and columnar storage formats (such as ORC and Parquet). It is deeply integrated with Flink to ingest change data from Kafka, logs, and business databases, and supports both streaming and batch processing to achieve low-latency, real-time updates and fast queries.
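To make the LSM idea more concrete, here is a minimal sketch in plain Python of how several sorted runs can be merged so that the newest write for each primary key wins. It is a conceptual illustration only, not PAIMON's implementation: the record layout `(key, sequence_number, value)`, the `merge_runs` function, and the use of `None` as a delete marker are all assumptions made for this example.

```python
import heapq
from itertools import groupby
from typing import Dict, List, Optional, Tuple

# Hypothetical record layout: (primary key, sequence number, value).
# A value of None marks a delete (tombstone).
Record = Tuple[str, int, Optional[dict]]


def merge_runs(runs: List[List[Record]]) -> Dict[str, dict]:
    """Merge sorted runs; for each key the highest sequence number wins."""
    # Each run is already sorted by key, so a k-way merge keeps global order.
    merged = heapq.merge(*runs, key=lambda r: (r[0], r[1]))
    result: Dict[str, dict] = {}
    for key, group in groupby(merged, key=lambda r: r[0]):
        records = list(group)
        _, _, value = records[-1]  # last record has the highest sequence number
        if value is not None:      # drop keys whose latest record is a delete
            result[key] = value
    return result


older_run = [("u1", 1, {"city": "Paris"}), ("u2", 2, {"city": "Lyon"})]
newer_run = [("u1", 3, {"city": "Berlin"}), ("u2", 4, None)]
merged_view = merge_runs([older_run, newer_run])
```

Writes only ever append new runs, which keeps updates cheap; the cost of reconciling versions is paid at read or compaction time.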

Example of PAIMON-based backend data flow architecture

Compared to other data lake frameworks (e.g. Apache Iceberg and Delta Lake), PAIMON stands out for its native support for unified stream-batch processing: it handles batch data efficiently and also responds in real time to changed data (e.g. CDC). It is also compatible with a variety of distributed storage systems (e.g. OSS, S3, HDFS) and integrates with OLAP tools (e.g. Spark, StarRocks, Doris) to ensure secure storage and efficient reads, providing flexible support for rapid decision-making and data analysis in the enterprise.

Key PAIMON Use Cases

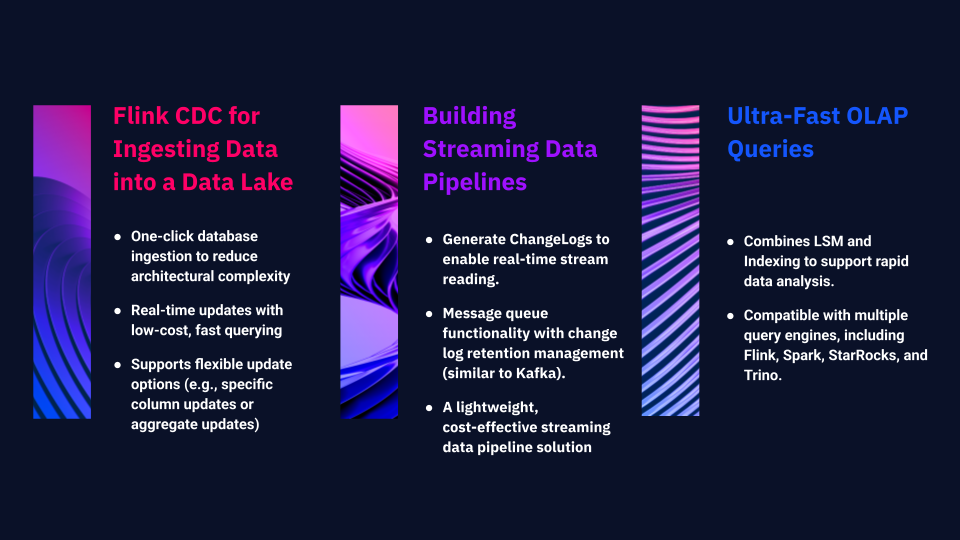

1. Flink CDC for Ingesting Data into a Data Lake

PAIMON simplifies and optimizes this process. With one-click ingestion, an entire database can be quickly imported into the data lake, greatly reducing architectural complexity. It supports real-time updates and fast queries at low cost. In addition, it provides flexible update options, such as updating only specific columns or applying different types of aggregation to incoming records.
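The two update styles mentioned above can be sketched in a few lines of plain Python. This is an illustration of the semantics only, under the assumption that a partial update ignores null fields and that an aggregated update combines the stored and incoming values per column; the function names `partial_update` and `aggregate_update` are hypothetical and are not part of any PAIMON API.

```python
import operator
from typing import Any, Callable, Dict, Optional

Row = Dict[str, Any]


def partial_update(current: Optional[Row], incoming: Row) -> Row:
    """Overwrite only the columns that carry a non-null value."""
    merged = dict(current or {})
    for column, value in incoming.items():
        if value is not None:
            merged[column] = value
    return merged


def aggregate_update(
    current: Optional[Row],
    incoming: Row,
    aggregators: Dict[str, Callable[[Any, Any], Any]],
) -> Row:
    """Combine configured columns with an aggregate function; replace the rest."""
    merged = dict(current or {})
    for column, value in incoming.items():
        if column in aggregators and column in merged:
            merged[column] = aggregators[column](merged[column], value)
        else:
            merged[column] = value
    return merged


# Two sources each fill in part of the same order row.
order = partial_update(None, {"order_id": 1, "status": "created", "amount": None})
order = partial_update(order, {"order_id": 1, "status": None, "amount": 25.0})

# Stock movements for one SKU are summed as they arrive.
stock = aggregate_update(None, {"sku": "A", "qty": 5}, {"qty": operator.add})
stock = aggregate_update(stock, {"sku": "A", "qty": 3}, {"qty": operator.add})
```

The partial-update style suits wide tables assembled from several upstream systems, while the aggregated style suits running totals such as inventory counts.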

2. Building Streaming Data Pipelines

PAIMON can be used to build a complete streaming data pipeline, with capabilities including:

PAIMON can generate a changelog, which gives streaming reads access to complete update records and makes it easier to build robust streaming data pipelines.

PAIMON is evolving into a message queue system with consumer mechanisms. Its latest version includes lifecycle management for changelogs, allowing users to define retention periods (e.g., logs can be retained for seven days or more), similar to Kafka. This creates a lightweight, cost-effective streaming pipeline solution.
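Both capabilities can be illustrated with a small, self-contained Python sketch. The first function derives changelog records from two versions of a keyed table, using the row-kind labels `+I`, `-U`, `+U` and `-D` familiar from Flink. The second applies a retention period to snapshots while keeping anything a registered consumer has not yet read. The function names, data shapes, and the exact expiry rule are assumptions for illustration and do not reproduce PAIMON's internals.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

Row = Dict[str, str]
Change = Tuple[str, str, Row]  # (row kind, primary key, row)


def diff_to_changelog(before: Dict[str, Row], after: Dict[str, Row]) -> List[Change]:
    """Emit insert, update-before, update-after and delete records."""
    changes: List[Change] = []
    for key in sorted(before.keys() | after.keys()):
        old, new = before.get(key), after.get(key)
        if old is None:
            changes.append(("+I", key, new))
        elif new is None:
            changes.append(("-D", key, old))
        elif old != new:
            changes.append(("-U", key, old))
            changes.append(("+U", key, new))
    return changes


def retained_snapshots(
    snapshots: List[Tuple[int, datetime]],
    consumers: Dict[str, int],  # consumer id -> next snapshot id it will read
    now: datetime,
    retention: timedelta,
) -> List[int]:
    """Keep snapshots inside the retention window or still needed by a consumer."""
    cutoff = now - retention
    oldest_needed = min(consumers.values(), default=None)
    kept: List[int] = []
    for snapshot_id, committed_at in snapshots:
        within_retention = committed_at >= cutoff
        needed = oldest_needed is not None and snapshot_id >= oldest_needed
        if within_retention or needed:
            kept.append(snapshot_id)
    return kept


before = {"u1": {"city": "Paris"}, "u2": {"city": "Lyon"}}
after = {"u1": {"city": "Berlin"}, "u3": {"city": "Rome"}}
changes = diff_to_changelog(before, after)

snapshots = [
    (1, datetime(2024, 1, 1)),
    (2, datetime(2024, 1, 2)),
    (3, datetime(2024, 1, 5)),
    (4, datetime(2024, 1, 9)),
]
now = datetime(2024, 1, 10)
kept = retained_snapshots(snapshots, {"dashboard": 2}, now, timedelta(days=7))
```

In this sketch a slow consumer holds back expiry, which is the trade-off any queue-like system makes between storage cost and safe consumption.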

3. Ultra-Fast OLAP Queries

While the first two use cases ensure real-time data flow, PAIMON also supports high-speed OLAP queries to analyze stored data. By combining its LSM structure with indexing, PAIMON enables rapid data analysis. Its ecosystem supports query engines such as Flink, Spark, StarRocks, and Trino, enabling efficient queries on data stored in PAIMON.
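One reason sorted storage helps queries is that each data file can carry the minimum and maximum key it contains, so a range query can skip files that cannot match. The sketch below shows that general data-skipping idea in plain Python; the `DataFile` class and `files_to_scan` function are hypothetical names for this example, and the sketch does not claim to mirror how PAIMON lays out its files or indexes.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataFile:
    name: str
    min_key: int  # smallest primary key stored in the file
    max_key: int  # largest primary key stored in the file


def files_to_scan(files: List[DataFile], low: int, high: int) -> List[str]:
    """Return only the files whose key range overlaps [low, high]."""
    return [f.name for f in files if f.max_key >= low and f.min_key <= high]


files = [DataFile("f1", 0, 99), DataFile("f2", 100, 199), DataFile("f3", 200, 299)]
to_read = files_to_scan(files, 150, 210)
```

Here the query touches two of three files; on a large table the same check can rule out most of the data before any file is opened.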

ARTEFACT Use Cases

Case 1: Enhancing Real-Time Data Analysis Efficiency

Case 2: Building Reliable Real-Time Business Monitoring

The above cases summarize ARTEFACT’s practical experience in implementing Apache PAIMON for clients. As a real-time data lake technology, PAIMON offers enterprises a highly efficient and flexible solution to tackle complex data processing challenges.
