In this article, we present a starter kit that demonstrates in practice what we learned using Vertex AI Pipelines on a project running in production.

This is an end-to-end tutorial on how to train a custom ML model and deploy it in production using Vertex AI Pipelines with Kubeflow Pipelines v2. If you are unsure how to approach Vertex AI, this tutorial will help you find your way, since everything in it is based on real-life experience. It includes many example pipelines that illustrate useful features of Vertex AI and Kubeflow. You will also find a Makefile with recipes for the most important tasks, which will save you plenty of time in building your model and getting it up and running in production.

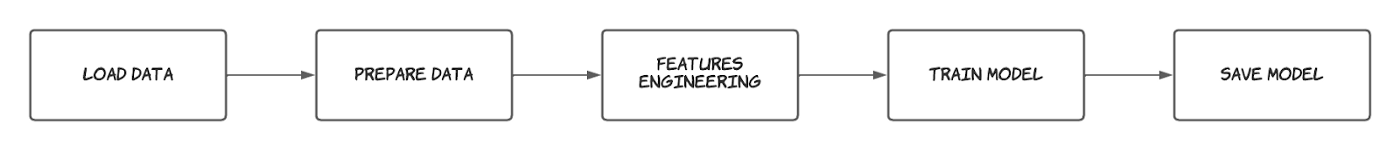

In ML, a pipeline can be defined as a set of connected jobs that perform all or part of the ML workflow (e.g., a training pipeline).

Example of a simple training pipeline

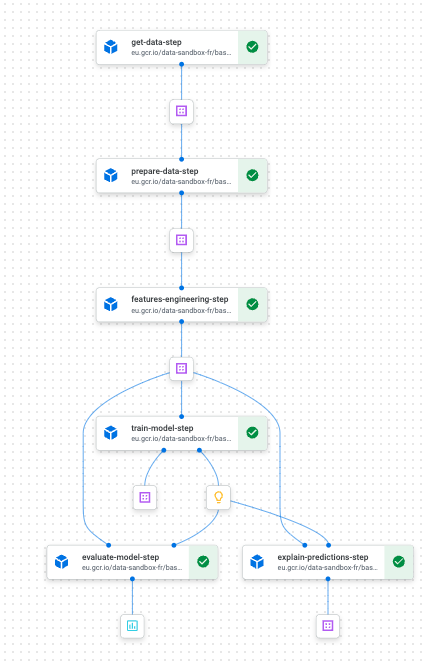

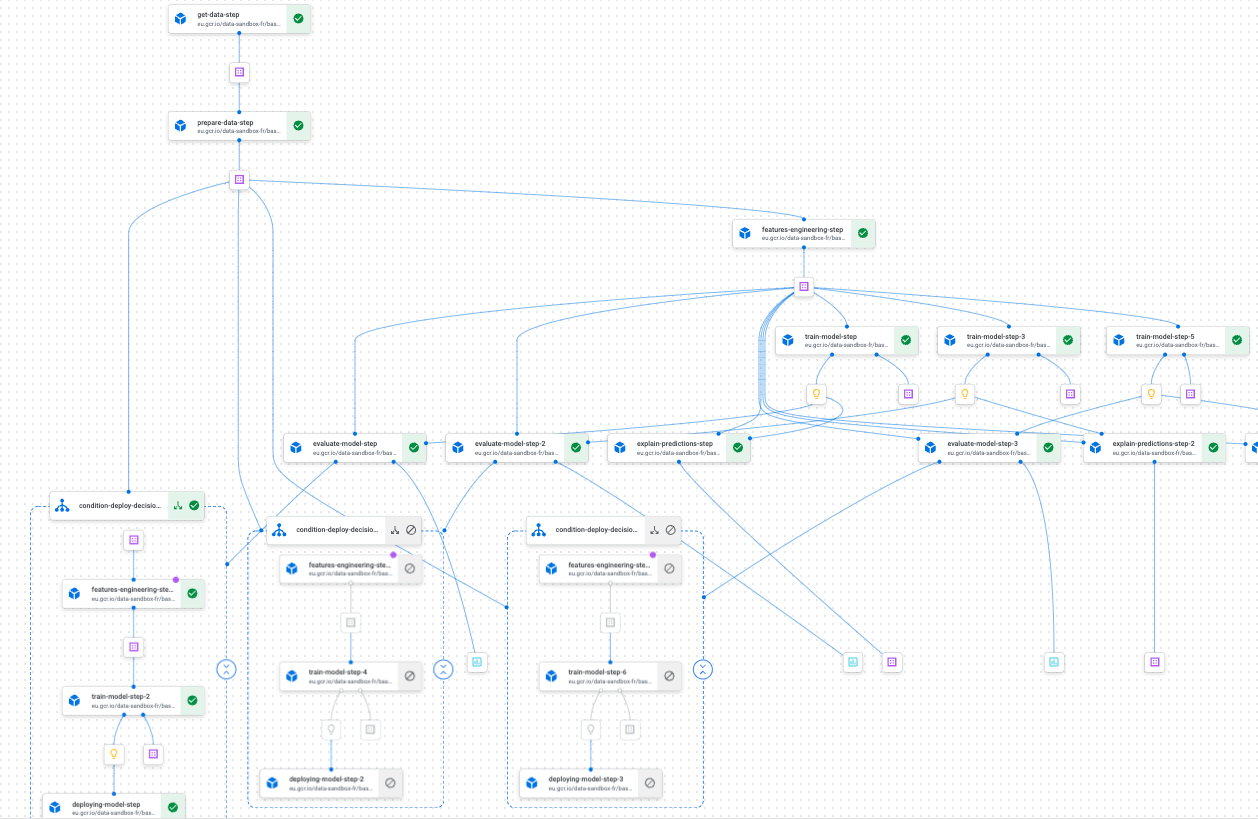

Example of a training pipeline on Vertex AI Pipelines using Kubeflow
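To make the definition above concrete, here is a minimal, framework-agnostic sketch in plain Python (not the Kubeflow API): each function stands for one job, and the pipeline function wires the outputs of one job into the next. The step names, the toy sales data and the mean-forecast "model" are all hypothetical.

```python
from statistics import mean


def load_data() -> list[float]:
    """Hypothetical ingestion step: daily unit sales of one product."""
    return [3.0, 5.0, 4.0, 6.0, 7.0, 5.0]


def split(series: list[float], horizon: int) -> tuple[list[float], list[float]]:
    """Hold out the last `horizon` days for evaluation."""
    return series[:-horizon], series[-horizon:]


def train(history: list[float]) -> float:
    """Toy 'model': forecast every future day with the historical mean."""
    return mean(history)


def evaluate(forecast: float, actuals: list[float]) -> float:
    """Mean absolute error of the constant forecast on the held-out days."""
    return mean(abs(y - forecast) for y in actuals)


def training_pipeline(horizon: int = 2) -> dict[str, float]:
    """Connect the jobs: each step consumes the outputs of the previous ones."""
    series = load_data()
    history, actuals = split(series, horizon)
    model = train(history)
    return {"forecast": model, "mae": evaluate(model, actuals)}


if __name__ == "__main__":
    print(training_pipeline())  # {'forecast': 4.5, 'mae': 1.5}
```

In Kubeflow, each of these functions would become a component and the pipeline function would pass task outputs between them; the wiring idea is the same.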

Designed properly, pipelines are reproducible and highly customizable. These two properties make experimenting with them and deploying them in production relatively easy. Using Vertex AI Pipelines along with Kubeflow helped us rapidly design and run custom pipelines with these properties. The pipeline examples in the starter kit are representative of what you are likely to encounter when working on an ML project that needs to be deployed in production. We also share a handful of tips and automation scripts so that you can focus on getting comfortable with Vertex AI.

When I first started using Vertex AI Pipelines, I was quite overwhelmed by the many different ways to accomplish the exact same task, and I was unsure how best to build my pipelines. After a few months, we found our way and formed a few convictions, at least on the most important aspects of managing the lifecycle of a production project with this technology.

As stated earlier, this article presents a starter kit that shows, in practice, our experience and what we have learned while using Vertex AI Pipelines. We hope it will help newcomers quickly grasp this powerful tool without paying the high entry price.

In the next sections, we present the most interesting concepts and features we found while using Vertex AI Pipelines, illustrated with a toy forecasting project based on the M5 forecasting competition. We intentionally do not focus on the modeling part; instead, we emphasize the steps needed to operationalize a model in production.

Build a custom base image and use it as a basis for your components

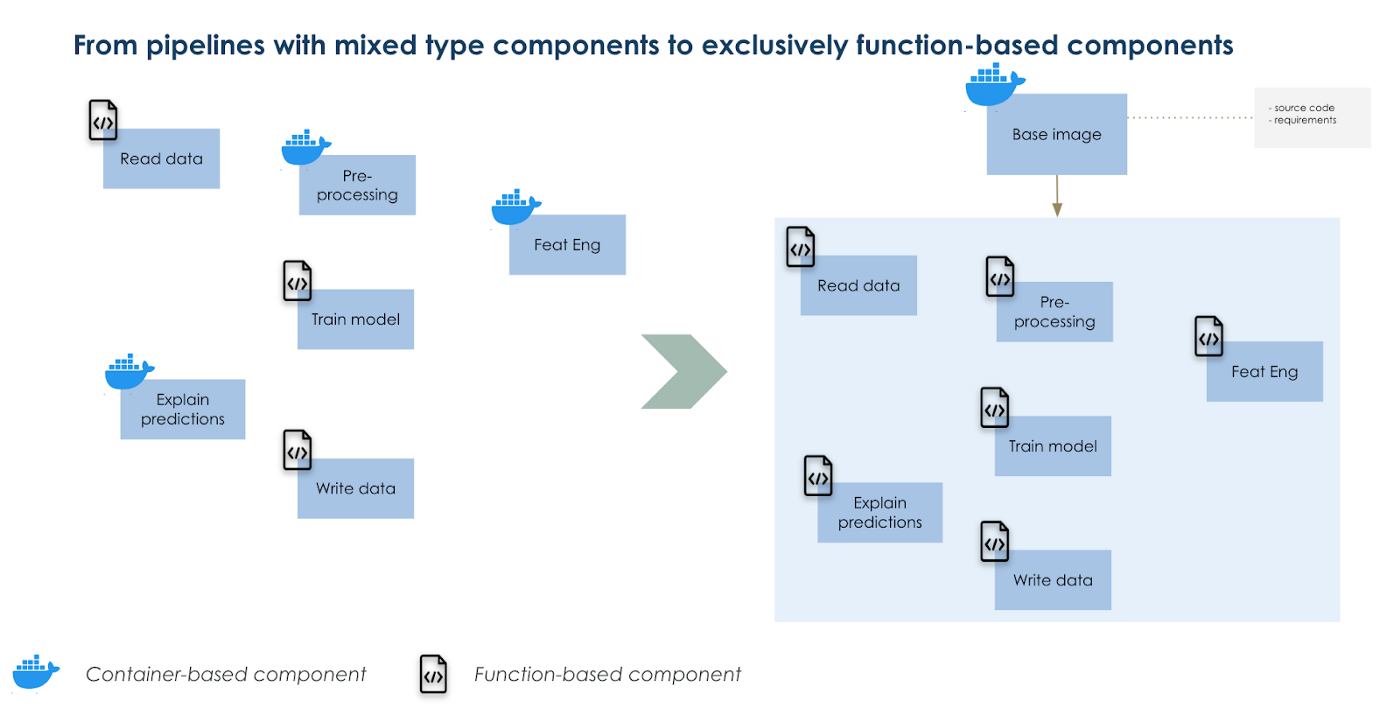

If you have ever worked with Kubeflow Pipelines, you may have wondered when to use container-based components and when to use function-based ones. Both options have pros and cons, but there is also a middle ground. Container-based components are better suited to complex tasks with many code dependencies, whereas function-based components hold all their code inside a single function and are usually simpler. The latter are also quicker to iterate on, because you do not need to build and deploy an image every time you edit the code. At the time of writing, function-based components run your function in a default Python 3.7 image.

Our solution for running complex and simple components the same way is to replace the default base image with a custom one. In this custom base image, we install all our code as a Python package. Function-based components then import those functions just as they would import pandas, for example. This way, complex and simple tasks run in the same manner, and only one image ever needs to be built: the base image.

We also organize our configuration files in a way that makes it easy to adapt the inputs of components and pipelines.
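The article does not show the configuration layout itself, so the following is only a hypothetical sketch of one common pattern: shared defaults plus per-environment overrides, deep-merged and then flattened into pipeline parameters. JSON is used so the example needs only the standard library; the starter kit may use another format, and every key and parameter name here is invented for illustration.

```python
import json
from typing import Any

# Hypothetical configuration: shared defaults plus production overrides.
# In a real project these would live in separate files.
BASE_CONFIG = json.loads("""
{
  "pipeline": {"name": "m5-training", "forecast_horizon": 28},
  "train": {"learning_rate": 0.1, "n_estimators": 200}
}
""")
PROD_OVERRIDES = json.loads("""
{
  "train": {"n_estimators": 500}
}
""")


def deep_merge(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Recursively merge `override` into `base` without mutating `base` (override wins)."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


def pipeline_parameters(config: dict[str, Any]) -> dict[str, Any]:
    """Flatten config sections into the keyword arguments a pipeline run would receive."""
    return {
        "forecast_horizon": config["pipeline"]["forecast_horizon"],
        **{f"train_{k}": v for k, v in config["train"].items()},
    }


if __name__ == "__main__":
    prod = deep_merge(BASE_CONFIG, PROD_OVERRIDES)
    print(pipeline_parameters(prod))
    # {'forecast_horizon': 28, 'train_learning_rate': 0.1, 'train_n_estimators': 500}
```

The resulting dictionary is the kind of mapping you could pass as `parameter_values` when submitting a compiled pipeline with `google.cloud.aiplatform.PipelineJob`; switching environments then only means switching the override file.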

Using a custom base image as the single foundation for all your components

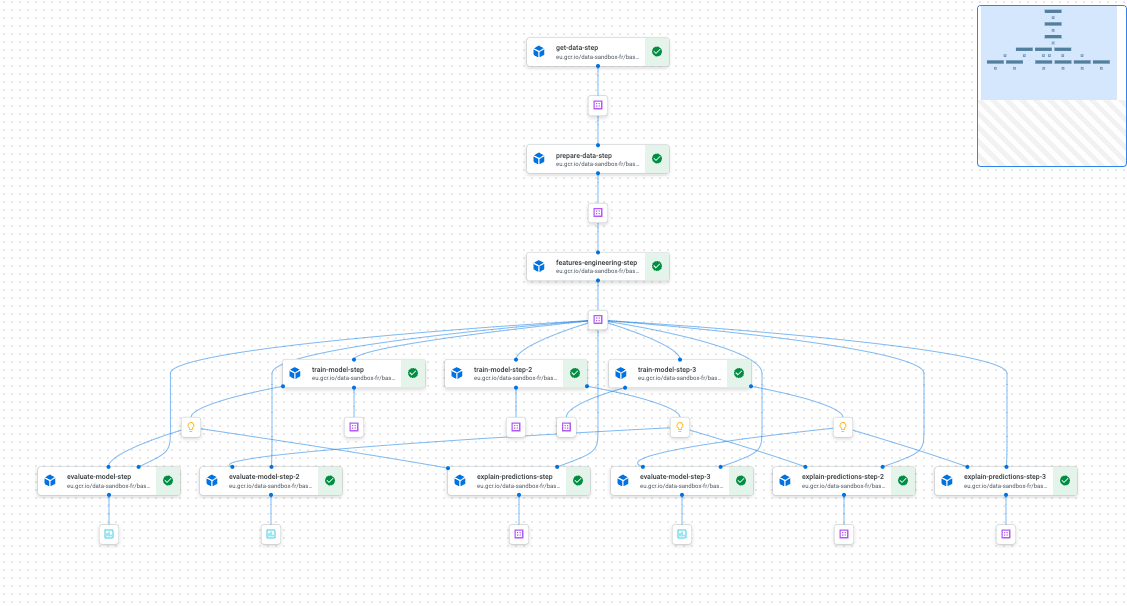

Parallelizing parts of your pipelines is as simple as writing a for loop

When experimenting with ML, we usually need to run many iterations of a simple training workflow, either to tune a hyperparameter or to train multiple models (e.g., one model per product category).

Doing this optimally means parallelizing the different training workflows to save time and make better use of resources. With Vertex AI Pipelines and Kubeflow, the effort is minimal by design: all it takes is a for loop. When you compile your pipeline, Kubeflow records the dependencies between steps, so at run time the steps or groups of steps that do not depend on each other run in parallel, while dependent steps run one after the other.

Example of a pipeline with parts that run in parallel
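Here is a rough, framework-agnostic illustration of the idea (not how Kubeflow is implemented internally): a for loop fans out one preprocess, train and evaluate chain per M5 product category, and a topological sort from Python's standard `graphlib` module groups the resulting tasks into stages whose members have no dependency on each other. All task names are hypothetical. In an actual KFP pipeline, the loop would be a plain `for` loop or a `dsl.ParallelFor` block inside the pipeline function.

```python
from graphlib import TopologicalSorter

# The three product categories of the M5 dataset.
CATEGORIES = ["FOODS", "HOBBIES", "HOUSEHOLD"]


def build_dag(categories: list[str]) -> dict[str, set[str]]:
    """Map each (hypothetical) task name to the set of tasks it depends on."""
    dag: dict[str, set[str]] = {"load_data": set()}
    # The "for loop": one independent preprocess -> train -> evaluate chain per category.
    for cat in categories:
        dag[f"preprocess_{cat}"] = {"load_data"}
        dag[f"train_{cat}"] = {f"preprocess_{cat}"}
        dag[f"evaluate_{cat}"] = {f"train_{cat}"}
    # A final step that needs every category's evaluation before it can run.
    dag["compare_models"] = {f"evaluate_{cat}" for cat in categories}
    return dag


def parallel_stages(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into stages; tasks within a stage do not depend on each other."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # also raises CycleError if the graph has a cycle
    stages: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every task whose dependencies are done
        stages.append(ready)
        sorter.done(*ready)
    return stages


if __name__ == "__main__":
    for i, stage in enumerate(parallel_stages(build_dag(CATEGORIES))):
        print(f"stage {i}: {stage}")
```

Grouping into stages is a simplification: an orchestrator starts each task as soon as its own upstream tasks have finished, so `train_FOODS` does not have to wait for `preprocess_HOBBIES`.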

Conditional deployment for operating your ML model seamlessly

With `kfp.dsl.Condition`, you can easily deploy a trained model, ready to be reused later, depending on logic evaluated inside the pipeline. If you are experimenting with many settings and want to move models to production seamlessly when a given set of conditions is met, this Kubeflow feature is very handy. Combined with a good CI/CD setup, it lets you operate your ML model lifecycle without a hitch.

Example of conditional deployment
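The kind of logic such a condition typically checks can be sketched in plain Python. In KFP, a gate like this would usually live in an evaluation component that outputs its decision, with the deployment steps nested under a `dsl.Condition` block that tests that output. The choice of MAE as the metric, the thresholds and the model names below are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelResult:
    name: str
    mae: float  # validation error of the model; lower is better


def should_deploy(
    challenger: ModelResult,
    champion: Optional[ModelResult],
    max_mae: float,
    min_improvement: float = 0.0,
) -> bool:
    """Deployment gate evaluated after training.

    The newly trained model must pass an absolute quality bar and, if a model
    is already serving, reduce the error by at least `min_improvement`.
    """
    if challenger.mae > max_mae:
        return False
    if champion is None:  # nothing in production yet
        return True
    return champion.mae - challenger.mae >= min_improvement


def deployment_branch(
    challenger: ModelResult,
    champion: Optional[ModelResult],
    max_mae: float,
    min_improvement: float = 0.0,
) -> str:
    """Plain-Python stand-in for the conditional branch of a pipeline."""
    if should_deploy(challenger, champion, max_mae, min_improvement):
        return f"deploying {challenger.name}"
    serving = champion.name if champion is not None else "no model"
    return f"skipping deployment, keeping {serving}"


if __name__ == "__main__":
    champion = ModelResult("model-v1", mae=1.30)
    print(deployment_branch(ModelResult("model-v2", mae=1.20), champion, max_mae=1.5, min_improvement=0.05))
    # deploying model-v2
    print(deployment_branch(ModelResult("model-v3", mae=1.28), champion, max_mae=1.5, min_improvement=0.05))
    # skipping deployment, keeping model-v1
```

Keeping both an absolute bar and a relative improvement margin avoids redeploying for noise-level gains while still allowing the very first model to go live.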

On top of these features (the list is not exhaustive), you get reproducibility, traceability, manageability and, last but not least, a great UI for monitoring everything in the Vertex AI console on GCP.

Conclusion

Nowadays, many ML models are expected to run in production. If you work on GCP and plan to use Vertex AI, we hope this starter kit makes your journey with the tool a pleasant one. It is also worth checking out if you start your projects with the ambition of making them useful as soon as possible, i.e., deploying them in production.

Many thanks to Luca Serra, Jeffrey Kayne and Robin Doumerc (Artefact), who helped build this starter kit, and to Maxime Lutel, who did the modelling for the toy project we use.

If you want to take it to the next level, the GCP documentation explains how to:
